444 Alaska Avenue

Suite #BAA205 Torrance, CA 90503 USA

+1 424 999 9627

24/7 Customer Support

sales@markwideresearch.com

Email us at

Corporate User License

Unlimited User Access, Post-Sale Support, Free Updates, Reports in English & Major Languages, and more

$3,450

The market for sensors in robotics and ADAS vehicles is a fast-moving technology ecosystem that is reshaping autonomous systems across multiple industries. Sensor technologies including LiDAR, radar, cameras, ultrasonic sensors, and inertial measurement units (IMUs) are driving innovation in both robotic applications and Advanced Driver Assistance Systems (ADAS). The market shows strong growth momentum, with sensor integration expanding at a CAGR of 12.8% as manufacturers increasingly prioritize safety, efficiency, and autonomous capabilities.

Technological convergence between robotics and automotive sectors has created synergistic opportunities for sensor manufacturers, enabling cross-platform innovations and economies of scale. The integration of artificial intelligence and machine learning algorithms with advanced sensor arrays has unlocked new possibilities for real-time decision-making, environmental perception, and predictive analytics. Market adoption is accelerating across diverse applications, from industrial automation and warehouse robotics to semi-autonomous vehicles and fully autonomous driving systems.

Regional dynamics show particularly strong growth in North America and Asia-Pacific, where automotive manufacturers and technology companies are investing heavily in sensor research and development. The market benefits from increasing regulatory support for ADAS technologies and growing consumer acceptance of automated systems. Sensor fusion technologies are becoming increasingly sophisticated, combining multiple sensor types to create comprehensive environmental awareness systems that exceed the capabilities of individual sensor technologies.

The sensor landscape in robotics and ADAS vehicle market refers to the comprehensive ecosystem of sensing technologies, hardware components, software integration platforms, and data processing systems that enable autonomous and semi-autonomous operations in robotic systems and advanced driver assistance applications. This market encompasses the development, manufacturing, and deployment of various sensor types including optical sensors, electromagnetic sensors, mechanical sensors, and hybrid sensing solutions designed specifically for autonomous navigation, object detection, environmental mapping, and safety monitoring.

Core technologies within this landscape include Light Detection and Ranging (LiDAR) systems for precise distance measurement and 3D mapping, radar sensors for weather-resistant detection capabilities, high-resolution cameras for visual recognition and classification, ultrasonic sensors for close-proximity detection, and inertial measurement units for motion tracking and stabilization. The market also encompasses the supporting infrastructure including sensor fusion algorithms, edge computing platforms, and communication protocols that enable seamless integration and real-time data processing across multiple sensor modalities.

Market transformation in the sensor landscape for robotics and ADAS vehicles is being driven by converging technological advances, regulatory requirements, and evolving consumer expectations for automated systems. The sector demonstrates robust growth potential with sensor adoption rates increasing by 34% annually across key application segments. Innovation cycles are accelerating as manufacturers develop increasingly sophisticated sensor arrays capable of operating in diverse environmental conditions while maintaining high accuracy and reliability standards.

Competitive dynamics are intensifying as traditional automotive suppliers, technology companies, and specialized sensor manufacturers compete for market share in this rapidly expanding sector. Strategic partnerships between sensor manufacturers, automotive OEMs, and robotics companies are becoming increasingly common, enabling faster technology development and market penetration. The market benefits from significant investment in research and development, with companies allocating substantial resources to next-generation sensor technologies including solid-state LiDAR, advanced radar systems, and AI-enhanced camera technologies.

Market segmentation reveals diverse opportunities across multiple application areas, with automotive ADAS applications currently representing the largest segment, followed by industrial robotics, service robotics, and autonomous vehicles. Technology integration trends show increasing emphasis on multi-sensor fusion approaches that combine complementary sensing technologies to achieve superior performance compared to single-sensor solutions.

Technological evolution in the sensor landscape is characterized by several key trends that are reshaping the market dynamics and creating new opportunities for innovation and growth:

Regulatory mandates are serving as a primary catalyst for market growth, with government agencies worldwide implementing increasingly stringent safety requirements for both automotive and industrial applications. Safety regulations in key markets are mandating the inclusion of specific ADAS technologies, creating guaranteed demand for sensor systems. The European Union’s General Safety Regulation and similar initiatives in other regions are establishing minimum requirements for automated emergency braking, lane departure warning, and other sensor-dependent safety systems.

Consumer demand for enhanced safety features and autonomous capabilities is driving automotive manufacturers to incorporate advanced sensor technologies as standard equipment rather than optional features. Market research indicates that 78% of consumers consider advanced safety features a critical factor in vehicle purchasing decisions. The growing awareness of traffic safety benefits and the potential for reduced insurance costs are further accelerating consumer acceptance of sensor-equipped vehicles.

Industrial automation trends are creating substantial demand for robotic systems equipped with sophisticated sensor arrays. Manufacturing efficiency requirements and labor shortage concerns are driving companies to invest in automated solutions that rely heavily on advanced sensing technologies. The Industry 4.0 movement is particularly influential, as smart manufacturing initiatives require extensive sensor integration for real-time monitoring, quality control, and predictive maintenance applications.

Technological maturation of key sensor technologies has reached a point where performance, reliability, and cost considerations align with market requirements. LiDAR technology has achieved significant cost reductions while improving range and accuracy, making it viable for broader commercial applications. Similarly, advances in radar and camera technologies have enhanced their capabilities while reducing power consumption and manufacturing costs.

High implementation costs continue to present significant barriers to market adoption, particularly for small and medium-sized enterprises considering robotic automation or fleet operators evaluating ADAS upgrades. Capital investment requirements for comprehensive sensor systems can be substantial, especially when considering the need for supporting infrastructure, training, and maintenance capabilities. The total cost of ownership calculations often extend beyond initial hardware costs to include ongoing calibration, software updates, and potential replacement requirements.

Technical complexity associated with sensor integration and calibration presents ongoing challenges for manufacturers and end-users. System integration requires specialized expertise and sophisticated testing procedures to ensure optimal performance across diverse operating conditions. The need for precise calibration and alignment of multiple sensor types adds complexity to manufacturing processes and field maintenance requirements.

Regulatory uncertainty in some markets creates hesitation among potential adopters who are concerned about future compliance requirements and potential technology obsolescence. Standards development is still evolving in many regions, creating uncertainty about long-term compatibility and certification requirements. The lack of harmonized international standards can complicate global deployment strategies for multinational companies.

Environmental limitations of certain sensor technologies continue to restrict their applicability in specific conditions. Weather sensitivity affects the performance of optical sensors, while electromagnetic interference can impact radar systems. These limitations require careful consideration of sensor selection and deployment strategies, potentially increasing system complexity and costs.

Emerging applications in autonomous delivery systems, agricultural robotics, and smart city infrastructure are creating substantial new market opportunities for sensor manufacturers. Last-mile delivery solutions are driving demand for compact, cost-effective sensor systems capable of navigating complex urban environments. The growing focus on sustainable agriculture is creating opportunities for precision farming applications that rely heavily on advanced sensing technologies for crop monitoring, soil analysis, and automated harvesting systems.

Technology convergence opportunities exist at the intersection of robotics and automotive applications, where shared sensor platforms can achieve economies of scale while serving multiple market segments. Cross-platform compatibility initiatives are enabling sensor manufacturers to develop versatile solutions that can be adapted for various applications with minimal modification. This approach reduces development costs while expanding addressable market opportunities.

Aftermarket opportunities are expanding as existing vehicle fleets and industrial equipment can be retrofitted with advanced sensor systems. Upgrade solutions for legacy systems represent a significant market opportunity, particularly in commercial vehicle fleets where operators seek to improve safety and efficiency without replacing entire vehicle platforms. The retrofit market is expected to grow substantially as sensor costs continue to decline and installation procedures become more standardized.

International expansion opportunities are emerging in developing markets where infrastructure development and industrialization are driving demand for automated systems. Market penetration in Asia-Pacific, Latin America, and other emerging regions offers substantial growth potential as local manufacturing capabilities expand and regulatory frameworks mature.

Competitive intensity is increasing as the market attracts participants from diverse industry backgrounds, including traditional automotive suppliers, technology companies, semiconductor manufacturers, and specialized sensor developers. Market consolidation trends are evident as larger companies acquire specialized sensor technologies and capabilities to build comprehensive solution portfolios. Strategic acquisitions are enabling rapid technology integration and market expansion while providing smaller companies with resources for scaled manufacturing and global distribution.

Innovation cycles are accelerating due to intense competition and rapid technological advancement. Research and development investments are focusing on next-generation technologies including solid-state sensors, quantum sensing applications, and bio-inspired sensing systems. The pace of innovation is driving shorter product lifecycles and requiring companies to maintain continuous development programs to remain competitive.

Supply chain dynamics are evolving as sensor manufacturers work to establish resilient, geographically diverse production capabilities. Manufacturing localization initiatives are gaining importance as companies seek to reduce supply chain risks and meet local content requirements in key markets. The semiconductor shortage experienced in recent years has highlighted the importance of supply chain resilience and diversification strategies.

Partnership ecosystems are becoming increasingly important as no single company possesses all the capabilities required for comprehensive sensor solutions. Collaborative development programs between sensor manufacturers, software companies, and system integrators are enabling faster innovation and more complete solution offerings. These partnerships are particularly important for developing sensor fusion capabilities and AI-enhanced processing systems.

Comprehensive market analysis for the sensor landscape in robotics and ADAS vehicles requires a multi-faceted research approach that combines primary and secondary research methodologies. Primary research activities include extensive interviews with industry executives, technology developers, and end-users across key market segments. These interviews provide insights into market trends, technology developments, competitive dynamics, and future growth opportunities that are not readily available through secondary sources.

Secondary research encompasses analysis of industry reports, patent filings, regulatory documents, and company financial statements to understand market structure, technology trends, and competitive positioning. Market data is collected from multiple sources including industry associations, government agencies, and specialized research organizations to ensure comprehensive coverage and data validation. MarkWide Research employs rigorous data verification processes to ensure accuracy and reliability of market insights and projections.

Technology assessment methodologies include detailed analysis of sensor specifications, performance benchmarks, and application suitability across different use cases. Competitive analysis involves evaluation of company capabilities, product portfolios, market positioning, and strategic initiatives. Market sizing and forecasting utilize multiple analytical approaches including bottom-up analysis based on application segments and top-down analysis based on market drivers and adoption trends.

Regional analysis incorporates consideration of local market conditions, regulatory environments, competitive landscapes, and technology adoption patterns. Validation processes include cross-referencing data from multiple sources, expert review panels, and market participant feedback to ensure accuracy and completeness of research findings.

North America maintains a leadership position in the sensor landscape market, driven by a strong automotive industry presence, advanced technology development capabilities, and a supportive regulatory environment. The region accounts for approximately 38% of global market activity, with the United States serving as a primary hub for sensor innovation and manufacturing. Silicon Valley technology companies are particularly active in developing AI-enhanced sensor systems and autonomous vehicle technologies. The region benefits from substantial venture capital investment and government research funding for autonomous systems development.

Europe represents a significant market segment with a strong emphasis on automotive safety regulations and industrial automation. German automotive manufacturers are leading the adoption of advanced sensor technologies in premium vehicle segments, while Scandinavian countries are pioneering applications in harsh weather conditions. The region’s market share stands at approximately 32%, with growth driven by stringent safety regulations and environmental sustainability initiatives. European Union regulatory frameworks are establishing global standards for sensor performance and safety requirements.

Asia-Pacific demonstrates the highest growth rates in sensor adoption, with China, Japan, and South Korea leading technology development and manufacturing capabilities. The region’s market share is expanding rapidly and currently stands at 25% of global activity, with growth projected to accelerate. Chinese manufacturers are making significant investments in sensor technology development and production capacity, while Japanese companies continue to lead in precision sensor manufacturing and miniaturization technologies.

Emerging markets in Latin America, the Middle East, and Africa are showing increasing interest in sensor technologies, particularly for commercial vehicle applications and industrial automation. These regions represent growing opportunities as infrastructure development and industrialization drive demand for automated systems equipped with advanced sensing capabilities.
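The regional shares quoted above can be cross-checked with simple arithmetic. The sketch below assumes that the three stated percentages are measured against the same global total, which the text does not say explicitly; the function name is illustrative only.

```python
# Regional shares as stated in the text (percent of global market activity).
stated_shares = {
    "North America": 38.0,
    "Europe": 32.0,
    "Asia-Pacific": 25.0,
}


def remaining_share(shares: dict[str, float], total: float = 100.0) -> float:
    """Share left over once the stated regions are accounted for."""
    covered = sum(shares.values())
    if covered > total:
        raise ValueError("stated shares exceed the total")
    return total - covered


# Assumption: all three figures refer to the same global total.
rest = remaining_share(stated_shares)
print(f"Implied share for remaining regions: {rest:.0f}%")
```

Under that assumption, the stated figures leave a small single-digit share for Latin America, the Middle East, and Africa combined.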

Market leadership is distributed among several categories of companies, each bringing distinct capabilities and competitive advantages to the sensor landscape ecosystem:

Competitive strategies vary significantly across market participants, with some companies focusing on technology leadership and innovation while others emphasize cost optimization and manufacturing scale. Vertical integration strategies are becoming more common as companies seek to control key technology components and reduce supply chain dependencies.

Technology-based segmentation reveals distinct market dynamics across different sensor types, each serving specific applications and performance requirements:

By Sensor Type:

By Application Segment:

Automotive ADAS applications currently dominate market demand, representing the largest segment with established regulatory requirements and consumer acceptance. Safety-critical applications in this category require the highest reliability and performance standards, driving premium pricing and substantial R&D investment. The segment benefits from mandatory installation requirements in many markets and growing consumer awareness of safety benefits.

Industrial robotics represents a rapidly growing segment with diverse application requirements ranging from precision assembly to heavy material handling. Sensor requirements vary significantly based on application, with some requiring extreme precision while others prioritize durability and environmental resistance. The segment is characterized by longer product lifecycles and higher customization requirements compared to automotive applications.

Autonomous vehicle applications represent the highest-growth potential segment, though commercial deployment remains limited. Technology requirements are more demanding than ADAS applications, requiring comprehensive 360-degree environmental awareness and real-time processing capabilities. The segment is driving development of next-generation sensor technologies including solid-state LiDAR and advanced sensor fusion systems.

Service robotics applications are expanding rapidly in consumer and commercial markets, with cost sensitivity being a primary consideration. Sensor solutions for this segment emphasize cost optimization while maintaining adequate performance for specific applications. The segment is benefiting from technology spillover from automotive and industrial applications, enabling cost-effective solutions for broader market adoption.

Automotive manufacturers benefit from enhanced product differentiation, improved safety ratings, and compliance with regulatory requirements through advanced sensor integration. Competitive advantages include reduced liability exposure, enhanced brand reputation, and access to new market segments focused on safety and automation. The integration of advanced sensor systems enables manufacturers to command premium pricing while meeting evolving consumer expectations for safety and convenience features.

Technology companies gain access to large-scale markets with substantial growth potential and opportunities for platform-based solutions that can serve multiple application segments. Revenue diversification opportunities exist as sensor technologies developed for one application can often be adapted for other markets with minimal modification. The sector offers opportunities for recurring revenue through software updates, calibration services, and data analytics services.

End-users across various industries benefit from improved safety, operational efficiency, and reduced labor costs through sensor-enabled automation. Return on investment is achieved through reduced accident rates, improved productivity, and lower insurance costs. Operational benefits include 24/7 operation capabilities, consistent performance quality, and reduced human error rates in critical applications.

Regulatory agencies benefit from improved compliance monitoring capabilities and enhanced public safety outcomes through widespread sensor adoption. Data collection opportunities enable better understanding of traffic patterns, accident causation, and system performance for future regulatory development. The technology enables more effective enforcement of safety regulations and provides objective data for policy development.

Strengths:

Weaknesses:

Opportunities:

Threats:

Sensor fusion evolution is transforming the market landscape as manufacturers develop increasingly sophisticated systems that combine multiple sensor types to achieve superior performance compared to individual sensors. AI-enhanced processing capabilities are enabling real-time analysis of complex sensor data streams, improving accuracy and reducing false positive rates. The trend toward edge computing integration is reducing latency and bandwidth requirements while enabling more responsive autonomous systems.
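As a rough illustration of why combining sensors can outperform any single one, the sketch below fuses two independent range estimates by inverse-variance weighting, a standard textbook technique rather than any vendor's method. The sensor readings and noise figures are hypothetical values chosen for illustration and are not taken from the report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measurement:
    """A range estimate in metres with its noise variance (hypothetical values)."""
    value: float
    variance: float


def fuse(measurements: list[Measurement]) -> Measurement:
    """Inverse-variance weighted fusion of independent estimates."""
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    # The fused variance is never larger than the smallest input variance.
    return Measurement(value=value, variance=1.0 / total)


# Illustrative numbers only: a radar and a LiDAR observing the same obstacle.
radar = Measurement(value=20.4, variance=0.25)
lidar = Measurement(value=20.0, variance=0.04)
fused = fuse([radar, lidar])
print(f"fused range: {fused.value:.2f} m, variance: {fused.variance:.4f}")
```

The fused estimate leans toward the less noisy sensor, and its variance is lower than that of either input, which is the sense in which a multi-sensor system can exceed its individual components. Production systems typically use richer models (for example Kalman filtering over time), which this sketch does not attempt.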

Miniaturization trends continue to drive sensor development, with manufacturers achieving significant size and weight reductions without compromising performance. Solid-state technologies are replacing mechanical components in LiDAR and other sensor types, improving reliability while reducing manufacturing costs. The development of integrated sensor packages that combine multiple sensing modalities in single units is simplifying installation and reducing system complexity.

Cost optimization initiatives are making advanced sensor technologies accessible to broader market segments. Manufacturing innovations including automated production processes and new materials are driving down unit costs while maintaining quality standards. The trend toward standardized interfaces and plug-and-play compatibility is reducing integration costs and complexity for end-users.

Sustainability considerations are influencing sensor design and manufacturing processes, with companies developing more energy-efficient systems and implementing circular economy principles. Lifecycle management programs are addressing end-of-life considerations and promoting component recycling and reuse opportunities.

Strategic partnerships between automotive manufacturers and technology companies are accelerating sensor development and deployment. Recent collaborations include joint development programs for next-generation LiDAR systems and AI-enhanced camera technologies. These partnerships are enabling faster technology transfer and reducing time-to-market for innovative sensor solutions.

Regulatory developments continue to shape market dynamics, with new safety standards and testing protocols being implemented across major markets. Certification processes are becoming more standardized, reducing compliance costs and enabling global product deployment strategies. The development of performance benchmarks is providing clearer guidance for technology development and market positioning.

Manufacturing capacity expansion is occurring across multiple regions as companies invest in production capabilities to meet growing demand. Localization initiatives are establishing regional manufacturing centers to serve local markets and reduce supply chain risks. Advanced manufacturing technologies including automated assembly and quality control systems are improving production efficiency and product consistency.

Technology breakthroughs in areas such as quantum sensing, bio-inspired sensors, and advanced materials are opening new possibilities for sensor performance and applications. Research initiatives at leading universities and corporate laboratories are exploring next-generation sensing technologies that could transform market dynamics in the coming decade.

Investment priorities should focus on sensor fusion technologies and AI-enhanced processing capabilities, as these areas offer the greatest potential for differentiation and value creation. MarkWide Research analysis indicates that companies investing in comprehensive sensor platforms rather than individual sensor types are achieving superior market positioning and growth rates. Technology integration capabilities are becoming more important than individual sensor performance as customers seek complete solutions rather than component products.

Market entry strategies should consider partnership approaches rather than independent development, particularly for companies without extensive sensor experience. Collaborative development programs can provide access to established distribution channels and customer relationships while sharing development risks and costs. Acquisition strategies may be appropriate for companies seeking to rapidly build sensor capabilities and market presence.

Geographic expansion should prioritize markets with supportive regulatory environments and established automotive or industrial automation sectors. Emerging markets offer substantial growth potential but require careful consideration of local requirements, competitive dynamics, and infrastructure capabilities. Regional partnerships can provide valuable market knowledge and distribution capabilities for international expansion initiatives.

Technology development should emphasize cost reduction and performance improvement in parallel, as market success requires both technical excellence and commercial viability. Manufacturing scalability should be considered from early development stages to ensure smooth transition from prototype to commercial production. Standardization compliance should be prioritized to ensure broad market compatibility and reduce integration barriers.

Market evolution over the next decade will be characterized by continued technology advancement, expanding applications, and increasing integration complexity. Growth projections indicate sustained expansion at a CAGR exceeding 15% as sensor technologies become essential components of autonomous systems across multiple industries. Technology convergence between different sensor types and AI processing capabilities will create new performance benchmarks and application possibilities.
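To make the compounding behind a CAGR figure concrete, the following sketch computes the growth multiple implied by a constant annual rate. It uses the 15% lower bound and the ten-year horizon mentioned above; the function names are illustrative, and no market size is assumed.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Factor by which a quantity grows at a constant compound annual rate."""
    return (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual rate that takes `start` to `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


# 15% is the lower bound quoted in the text; ten years matches "the next decade".
decade = growth_multiple(0.15, 10)
print(f"10 years at 15% CAGR: x{decade:.2f}")
```

At that rate a market would roughly quadruple over ten years, which gives a sense of scale for the projection without implying any particular starting value.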

Autonomous vehicle deployment will serve as a primary growth driver, though commercial rollout timelines remain uncertain due to regulatory and technical challenges. ADAS expansion will continue to provide steady market growth as safety features become standard equipment across vehicle segments. Industrial automation applications will expand beyond traditional manufacturing to include logistics, agriculture, and service sectors.

Technology trends will focus on improved environmental resilience, reduced power consumption, and enhanced AI integration capabilities. Next-generation sensors will incorporate self-diagnostic capabilities, predictive maintenance features, and adaptive performance optimization. Quantum sensing technologies may emerge as commercial solutions for specialized applications requiring extreme precision and sensitivity.

Market consolidation is expected to continue as larger companies acquire specialized technologies and smaller companies seek resources for scaled manufacturing and global distribution. Platform-based approaches will become more common as companies develop comprehensive sensor ecosystems rather than individual products. Sustainability requirements will increasingly influence technology development and manufacturing processes, driving innovation in materials and lifecycle management approaches.

The sensor landscape in robotics and ADAS vehicle market represents a dynamic and rapidly evolving ecosystem that is fundamental to the advancement of autonomous systems across multiple industries. Market growth is being driven by converging factors including regulatory requirements, technological maturation, and increasing consumer acceptance of automated systems. The sector demonstrates strong fundamentals with diverse application opportunities and substantial investment in research and development.

Competitive dynamics are intensifying as traditional suppliers, technology companies, and specialized manufacturers compete for market share in this expanding sector. Success factors include technology integration capabilities, manufacturing scale, and the ability to serve multiple market segments with platform-based solutions. Strategic partnerships and collaborative development programs are becoming essential for companies seeking to build comprehensive sensor capabilities and market presence.

Future prospects remain highly positive despite near-term challenges related to technology complexity, cost considerations, and regulatory uncertainty. Long-term growth will be supported by expanding applications in autonomous vehicles, industrial automation, and emerging robotics segments. The continued evolution of AI and machine learning technologies will enhance sensor capabilities while creating new opportunities for value-added services and solutions. Market participants that successfully navigate the current technology transition period while building scalable, cost-effective solutions will be well-positioned to capitalize on the substantial growth opportunities ahead.

What is the Sensor Landscape in Robotics and ADAS Vehicle Market?

The Sensor Landscape in Robotics and ADAS Vehicle refers to the various types of sensors used in robotic systems and advanced driver-assistance systems (ADAS) to enhance functionality, safety, and automation. This includes technologies such as LiDAR, radar, cameras, and ultrasonic sensors that enable perception and decision-making in vehicles.

What are the key companies in the Sensor Landscape in Robotics and ADAS Vehicle Market?

Key companies in the Sensor Landscape in Robotics and ADAS Vehicle Market include Bosch, Continental, and Denso, among others, which are known for their innovative sensor technologies and solutions for automotive applications.

What are the growth factors driving the Sensor Landscape in Robotics and ADAS Vehicle Market?

The growth of the Sensor Landscape in Robotics and ADAS Vehicle Market is driven by increasing demand for vehicle safety features, advancements in sensor technology, and the rise of autonomous vehicles. These factors contribute to enhanced driving experiences and improved road safety.

What challenges does the Sensor Landscape in Robotics and ADAS Vehicle Market face?

Challenges in the Sensor Landscape in Robotics and ADAS Vehicle Market include high development costs, integration complexities, and regulatory hurdles. These factors can hinder the widespread adoption of advanced sensor technologies in vehicles.

What future opportunities exist in the Sensor Landscape in Robotics and ADAS Vehicle Market?

Future opportunities in the Sensor Landscape in Robotics and ADAS Vehicle Market include the development of more sophisticated sensor systems, increased collaboration between tech companies and automakers, and the expansion of smart city initiatives that integrate vehicle sensors with urban infrastructure.

What trends are shaping the Sensor Landscape in Robotics and ADAS Vehicle Market?

Trends shaping the Sensor Landscape in Robotics and ADAS Vehicle Market include the growing use of artificial intelligence for sensor data processing, the shift towards electric and autonomous vehicles, and the increasing focus on vehicle-to-everything (V2X) communication technologies.

Sensor Landscape in Robotics and ADAS Vehicle Market

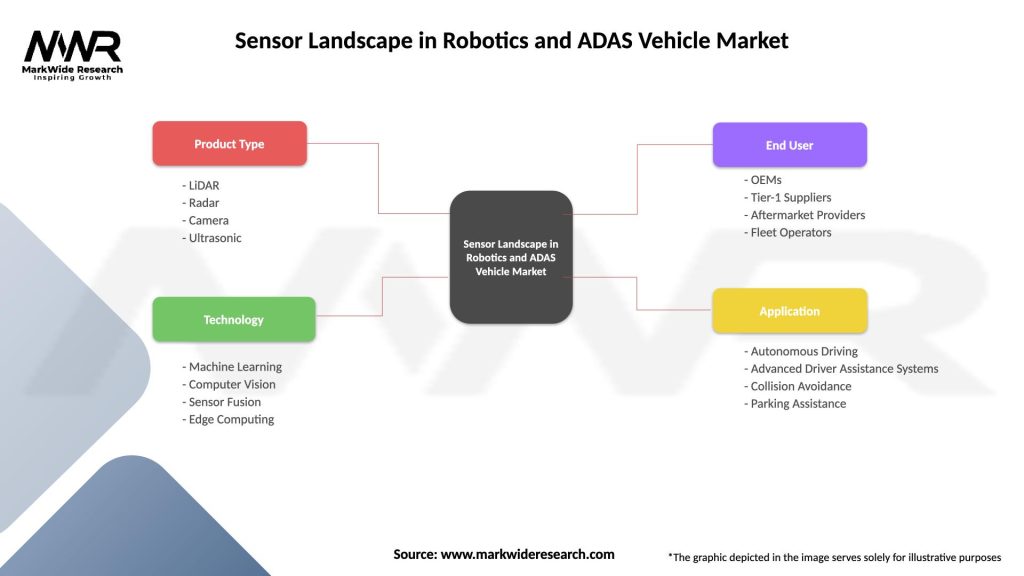

| Segmentation Details | Description |
|---|---|
| Product Type | LiDAR, Radar, Camera, Ultrasonic |
| Technology | Machine Learning, Computer Vision, Sensor Fusion, Edge Computing |
| End User | OEMs, Tier-1 Suppliers, Aftermarket Providers, Fleet Operators |
| Application | Autonomous Driving, Advanced Driver Assistance Systems, Collision Avoidance, Parking Assistance |

Please note: The segmentation can be entirely customized to align with our client’s needs.

Leading companies in the Sensor Landscape in Robotics and ADAS Vehicle Market

Please note: This is a preliminary list; the final study will feature 18–20 leading companies in this market. The selection of companies in the final report can be customized based on our client’s specific requirements.

North America

- US
- Canada
- Mexico

Europe

- Germany
- Italy
- France
- UK
- Spain
- Denmark
- Sweden
- Austria
- Belgium
- Finland
- Turkey
- Poland
- Russia
- Greece
- Switzerland
- Netherlands
- Norway
- Portugal
- Rest of Europe

Asia Pacific

- China
- Japan
- India
- South Korea
- Indonesia
- Malaysia
- Kazakhstan
- Taiwan
- Vietnam
- Thailand
- Philippines
- Singapore
- Australia
- New Zealand
- Rest of Asia Pacific

South America

- Brazil
- Argentina
- Colombia
- Chile
- Peru
- Rest of South America

The Middle East & Africa

- Saudi Arabia
- UAE
- Qatar
- South Africa
- Israel
- Kuwait
- Oman
- North Africa
- West Africa
- Rest of MEA

Trusted by Global Leaders

Fortune 500 companies, SMEs, and top institutions rely on MWR’s insights to make informed decisions and drive growth.

ISO & IAF Certified

Our certifications reflect a commitment to accuracy, reliability, and high-quality market intelligence trusted worldwide.

Customized Insights

Every report is tailored to your business, offering actionable recommendations to boost growth and competitiveness.

Multi-Language Support

Final reports are delivered in English and major global languages including French, German, Spanish, Italian, Portuguese, Chinese, Japanese, Korean, Arabic, Russian, and more.

Unlimited User Access

Corporate License offers unrestricted access for your entire organization at no extra cost.

Free Company Inclusion

We add 3–4 extra companies of your choice for more relevant competitive analysis — free of charge.

Post-Sale Assistance

Dedicated account managers provide unlimited support, handling queries and customization even after delivery.

GET A FREE SAMPLE REPORT

This free sample study provides a complete overview of the report, including the executive summary, market segments, competitive analysis, country-level analysis, and more.
