444 Alaska Avenue

Suite #BAA205 Torrance, CA 90503 USA

+1 424 999 9627

24/7 Customer Support

sales@markwideresearch.com

Email us at

Corporate User License

Unlimited User Access, Post-Sale Support, Free Updates, Reports in English & Major Languages, and more

$3450

The explainable AI market represents one of the most critical technological frontiers in artificial intelligence development, addressing the growing demand for transparency and interpretability in machine learning systems. Organizations worldwide are increasingly recognizing that black-box AI models, while powerful, create significant challenges in regulated industries, ethical decision-making, and trust-building with stakeholders. The market encompasses various technologies, tools, and methodologies designed to make AI decision-making processes more transparent and understandable to human users.

Market dynamics indicate robust growth driven by regulatory compliance requirements, ethical AI initiatives, and the need for trustworthy artificial intelligence across industries. The healthcare, financial services, and automotive sectors are leading adoption, with regulatory compliance serving as a primary catalyst. Enterprise adoption rates have accelerated significantly, with organizations implementing explainable AI solutions to meet governance requirements and build stakeholder confidence.

Technological advancement in this sector focuses on developing sophisticated algorithms that can provide clear explanations for AI-driven decisions without compromising model performance. The market includes various approaches such as model-agnostic explanation methods, interpretable machine learning models, and visualization tools that help users understand complex AI behaviors. Growth projections suggest the market will expand at a compound annual growth rate of 18.5% through the forecast period, driven by increasing regulatory scrutiny and enterprise demand for transparent AI systems.
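The 18.5% compound annual growth rate cited above can be made concrete with the standard compounding formula. The text here states neither a base-year market size nor the length of the forecast period, so the starting value $V_0$ and the five-year horizon below are illustrative assumptions rather than figures from the study.

```latex
V_n = V_0 \,(1 + r)^n, \qquad r = 0.185

\frac{V_5}{V_0} = (1.185)^5 \approx 2.34
```

Under these assumptions, a market growing at 18.5% per year would reach roughly 2.3 times its starting size after five years.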

The explainable AI market refers to the comprehensive ecosystem of technologies, platforms, and services designed to make artificial intelligence systems more transparent, interpretable, and accountable in their decision-making processes. This market encompasses solutions that enable users to understand how AI models arrive at specific conclusions, predictions, or recommendations, addressing the critical challenge of AI transparency in business and regulatory environments.

Explainable AI fundamentally transforms the relationship between humans and artificial intelligence by providing clear, understandable explanations for automated decisions. Unlike traditional black-box AI systems that operate without revealing their internal logic, explainable AI solutions offer insights into the reasoning process, feature importance, and decision pathways. This transparency is essential for building trust, ensuring compliance with regulations, and enabling human oversight of AI-driven processes.
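As a rough illustration of what a feature importance explanation can look like, the sketch below implements permutation importance, one common model-agnostic technique: shuffle one input column at a time and measure how much the model's error grows. The `black_box` function, the synthetic data, and all parameter names are hypothetical stand-ins chosen for the example and are not drawn from the report.

```python
import random


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean increase in mean-squared error when each feature column is shuffled."""
    rng = random.Random(seed)

    def mse(rows):
        return sum((predict(r) - t) ** 2 for r, t in zip(rows, y)) / len(y)

    baseline = mse(X)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            # Shuffle column j only, leaving every other feature untouched.
            column = [row[j] for row in X]
            rng.shuffle(column)
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(shuffled) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Hypothetical opaque model: relies heavily on feature 0, weakly on
# feature 1, and not at all on feature 2.
def black_box(row):
    return 3.0 * row[0] + 0.5 * row[1]


data_rng = random.Random(42)
X = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
y = [black_box(row) for row in X]

scores = permutation_importance(black_box, X, y)
for index, score in enumerate(scores):
    print(f"feature {index}: importance {score:.3f}")
```

The explanation only needs to call the model, not inspect its internals, which is what makes the approach model-agnostic. In this toy setup the feature the model ignores receives an importance of zero.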

Market scope includes various technological approaches such as post-hoc explanation methods, inherently interpretable models, visualization tools, and natural language explanation systems. The market serves diverse industries including healthcare, finance, legal services, autonomous vehicles, and government sectors where AI transparency is not just beneficial but often legally required.

The explainable AI market is experiencing unprecedented growth as organizations worldwide grapple with the need for transparent and accountable artificial intelligence systems. Regulatory pressure from frameworks such as the GDPR, whose provisions on automated decision-making are often described as a “right to explanation,” and from emerging AI governance legislation is driving significant market expansion. The healthcare and financial services sectors are the largest adoption segments, together accounting for approximately 45% of market demand due to stringent regulatory requirements and high-stakes decision-making scenarios.

Technology innovation continues to advance rapidly, with major developments in model-agnostic explanation methods, counterfactual explanations, and interactive visualization platforms. Enterprise adoption has accelerated beyond compliance-driven implementations, with organizations recognizing the strategic value of explainable AI for improving model performance, debugging complex systems, and building stakeholder trust. Investment levels in explainable AI research and development have increased by over 150% in recent years.
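A counterfactual explanation, one of the techniques named above, answers the question "what is the smallest change that would have produced a different decision?" The sketch below is a deliberately simple one-feature search. The `approve` rule, the applicant fields, and the step size are hypothetical and stand in for an opaque production model.

```python
def find_counterfactual(predict, instance, feature, step, max_steps=1000):
    """Return a copy of `instance` with `feature` changed by the fewest
    multiples of `step` needed to flip `predict`, or None if no flip is found."""
    original = predict(instance)
    candidate = dict(instance)
    for _ in range(max_steps):
        candidate[feature] += step
        if predict(candidate) != original:
            return candidate
    return None


# Hypothetical stand-in for an opaque credit model (integer arithmetic only).
def approve(applicant):
    return applicant["income_k"] - 4 * applicant["open_debts"] >= 40


applicant = {"income_k": 30, "open_debts": 2}
cf = find_counterfactual(approve, applicant, "income_k", step=1)
print(approve(applicant), cf)
```

Here the applicant is rejected, and the search reports that an income of 48 (in thousands) with unchanged debts would have been approved. Practical counterfactual methods search over several features at once and add plausibility constraints, which this sketch omits.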

Market challenges include the technical complexity of balancing model performance with interpretability, the lack of standardized explanation formats, and the need for domain-specific explanation approaches. However, these challenges are driving innovation and creating opportunities for specialized solution providers. Future growth is expected to be sustained by expanding regulatory requirements, increasing AI adoption across industries, and growing awareness of AI ethics and responsible AI practices.

Strategic market insights reveal several critical trends shaping the explainable AI landscape. Regulatory compliance remains the primary driver, with organizations investing heavily in explainable AI solutions to meet evolving legal requirements and avoid potential penalties. The market is witnessing a shift from purely compliance-driven adoption to strategic implementation for competitive advantage and operational excellence.

Regulatory compliance requirements serve as the most significant driver for explainable AI market growth. Government regulations worldwide are increasingly mandating transparency in automated decision-making systems, particularly in sectors affecting individual rights and public welfare. The European Union’s GDPR established early precedents for AI transparency, while emerging legislation in the United States, Canada, and other regions continues to expand these requirements.

Ethical AI initiatives within organizations are driving substantial investment in explainable AI technologies. Corporate responsibility programs increasingly emphasize fair and transparent AI systems as part of broader sustainability and governance strategies. Organizations recognize that explainable AI is essential for building and maintaining public trust, particularly in consumer-facing applications where AI decisions directly impact individuals.

Risk management considerations motivate organizations to implement explainable AI solutions for identifying and mitigating potential biases, errors, and unintended consequences in AI systems. Financial institutions particularly value explainable AI for managing credit risk, detecting fraud, and ensuring fair lending practices. The ability to audit and validate AI decisions provides crucial protection against regulatory penalties and reputational damage.

Competitive differentiation opportunities are emerging as organizations use explainable AI to demonstrate superior governance, transparency, and customer service. Market leaders leverage explainable AI capabilities to build stronger customer relationships, improve product quality, and establish thought leadership in responsible AI practices. This strategic positioning creates sustainable competitive advantages in increasingly AI-driven markets.

Technical complexity challenges represent significant barriers to explainable AI adoption, particularly the fundamental tension between model performance and interpretability. High-performance AI models often operate as complex black boxes that are inherently difficult to explain, while simpler, more interpretable models may sacrifice accuracy and capability. Organizations struggle to find optimal solutions that balance these competing requirements while meeting operational needs.

Implementation costs pose substantial challenges for many organizations, particularly smaller enterprises with limited AI budgets. Explainable AI solutions often require specialized expertise, additional computational resources, and extensive integration work with existing systems. The need for custom explanation approaches for different use cases and industries further increases implementation complexity and costs.

Standardization gaps create uncertainty and compatibility issues across different explainable AI platforms and approaches. Industry stakeholders lack consensus on explanation formats, evaluation metrics, and quality standards, making it difficult for organizations to compare solutions and ensure long-term compatibility. This fragmentation slows adoption and increases the risk of technology lock-in with specific vendors.

Skills shortage in explainable AI expertise limits market growth, as organizations struggle to find qualified professionals who understand both AI technology and explanation methodologies. Educational institutions are only beginning to incorporate explainable AI concepts into their curricula, creating a significant gap between market demand and available talent. This shortage drives up implementation costs and extends project timelines.

Emerging regulatory frameworks worldwide create substantial opportunities for explainable AI solution providers as governments develop comprehensive AI governance legislation. Regulatory expansion beyond current requirements will drive demand for more sophisticated explanation capabilities, creating opportunities for innovative solution providers to establish market leadership. Organizations that proactively address emerging regulatory requirements will gain significant competitive advantages.

Industry-specific solutions represent high-growth opportunities as different sectors require specialized explanation approaches tailored to their unique requirements and constraints. Healthcare applications need medical terminology and clinical reasoning explanations, while financial services require explanations focused on risk factors and regulatory compliance. This specialization creates opportunities for niche solution providers and vertical market expansion.

Integration opportunities with existing AI platforms and enterprise software systems offer significant market potential. Technology partnerships between explainable AI specialists and established AI platform providers can accelerate market penetration and reduce implementation barriers. Cloud-based explainable AI services particularly offer scalable opportunities for reaching smaller organizations and emerging markets.

Educational and training services present growing opportunities as organizations need to build internal capabilities for implementing and managing explainable AI systems. Professional development programs focusing on explainable AI concepts, implementation best practices, and regulatory compliance create additional revenue streams and market expansion opportunities for solution providers.

Market dynamics in the explainable AI sector reflect the complex interplay between technological innovation, regulatory evolution, and organizational adoption patterns. Technology advancement continues to drive market evolution, with researchers developing increasingly sophisticated methods for generating human-understandable explanations without significantly compromising model performance. Performance improvements in explanation quality have reached approximately 35% over the past two years, making explainable AI solutions more viable for production environments.

Competitive dynamics are intensifying as established AI platform providers integrate explainable AI capabilities into their offerings while specialized startups focus on innovative explanation methodologies. Market consolidation through acquisitions and partnerships is accelerating, with major technology companies acquiring explainable AI specialists to enhance their platform capabilities. This consolidation is creating more comprehensive solution offerings while potentially reducing innovation diversity.

Customer behavior patterns show a clear evolution from compliance-focused implementations to strategic deployments that leverage explainable AI for business optimization and competitive advantage. Enterprise buyers increasingly evaluate explainable AI solutions based on their ability to improve decision-making quality, reduce operational risks, and enhance customer trust rather than simply meeting regulatory requirements.

Supply chain dynamics are evolving as cloud-based delivery models become dominant, reducing implementation barriers and enabling faster time-to-value for customers. Service delivery models are shifting toward subscription-based offerings that include ongoing support, updates, and compliance monitoring, creating more predictable revenue streams for providers and lower upfront costs for customers.

The research methodology employed in analyzing the explainable AI market combines quantitative data analysis with qualitative insights from industry experts, technology providers, and end-user organizations. Primary research activities include structured interviews with C-level executives, AI practitioners, and regulatory experts across key vertical markets to understand adoption patterns, implementation challenges, and future requirements.

Secondary research sources encompass academic publications, patent filings, regulatory documents, and industry reports to establish technological trends and competitive landscapes. Market sizing methodologies utilize bottom-up analysis based on adoption rates, implementation costs, and market penetration across different industry segments and geographic regions. Data validation processes include cross-referencing multiple sources and expert review to ensure accuracy and reliability.

Analytical frameworks incorporate Porter’s Five Forces analysis, SWOT assessment, and technology adoption lifecycle models to provide comprehensive market insights. Forecasting methodologies combine historical trend analysis with scenario modeling to account for regulatory changes, technological breakthroughs, and market disruptions. Quality assurance processes ensure data integrity and analytical rigor throughout the research process.

Geographic coverage includes detailed analysis of North American, European, and Asia-Pacific markets, with additional insights from emerging markets in Latin America and the Middle East. Industry segmentation covers healthcare, financial services, automotive, government, retail, and manufacturing sectors to provide comprehensive market understanding and identify sector-specific opportunities and challenges.

North American markets lead global explainable AI adoption, driven by strong regulatory frameworks, advanced AI infrastructure, and significant enterprise investment in AI governance. United States organizations account for approximately 42% of global market activity, with particular strength in healthcare, financial services, and technology sectors. Canadian markets show strong growth in government and healthcare applications, supported by national AI strategy initiatives and privacy regulations.

European markets demonstrate the highest regulatory-driven demand, with GDPR and emerging AI Act requirements creating substantial market opportunities. Germany and France lead European adoption, particularly in automotive and manufacturing applications where explainable AI supports safety certification and quality assurance processes. Nordic countries show strong government sector adoption, leveraging explainable AI for transparent public service delivery and citizen engagement.

Asia-Pacific regions represent the fastest-growing markets, with growth rates exceeding 25% annually driven by rapid AI adoption and increasing regulatory attention to AI transparency. Japan and South Korea lead in automotive and electronics applications, while Singapore and Australia focus on financial services and government implementations. China’s market shows strong growth in healthcare and smart city applications, supported by government initiatives promoting responsible AI development.

Emerging markets in Latin America, the Middle East, and Africa show increasing interest in explainable AI, particularly for government transparency and financial inclusion applications. Brazil and Mexico lead Latin American adoption, while the UAE and Saudi Arabia drive Middle Eastern market growth through smart city and digital government initiatives. These markets present significant long-term opportunities as AI adoption accelerates and regulatory frameworks mature.

The competitive landscape in the explainable AI market features a diverse ecosystem of established technology giants, specialized AI companies, and emerging startups, each bringing unique capabilities and market approaches. Market competition intensifies as organizations seek comprehensive solutions that balance explanation quality, performance impact, and implementation complexity.

Competitive strategies vary significantly across market participants, with established technology companies leveraging platform integration advantages while specialized providers focus on superior explanation quality and industry expertise. Partnership strategies are becoming increasingly important as organizations seek comprehensive solutions that combine multiple capabilities and expertise areas.

Market segmentation analysis reveals distinct patterns across technology types, deployment models, industry verticals, and organizational sizes. Technology segmentation includes model-agnostic explanation methods, inherently interpretable models, and hybrid approaches that balance performance with transparency requirements. Each segment serves different use cases and organizational needs, creating diverse market opportunities.

By Technology Type:

By Deployment Model:

Healthcare applications represent the largest and most mature segment of the explainable AI market, driven by critical patient safety requirements and stringent regulatory oversight. Medical AI systems require transparent decision-making processes to enable physician oversight, support clinical validation, and meet regulatory approval requirements. Diagnostic AI applications particularly benefit from explainable AI capabilities that highlight relevant medical features and provide clinical reasoning pathways.

Financial services implementations focus primarily on credit decisioning, fraud detection, and risk management applications where regulatory compliance and customer transparency are essential. Banking institutions leverage explainable AI to demonstrate fair lending practices, explain credit decisions to customers, and support regulatory examinations. Insurance companies use explainable AI for transparent claims processing and risk assessment, improving customer satisfaction and regulatory compliance.

Automotive sector adoption centers on autonomous vehicle development and safety certification, where explainable AI provides crucial insights into vehicle decision-making processes. Safety-critical systems require transparent reasoning capabilities to support regulatory approval and public acceptance of autonomous vehicles. Manufacturing quality control applications use explainable AI to identify defect patterns and optimize production processes.

Government and public sector implementations emphasize transparency, accountability, and citizen trust in automated decision-making systems. Public service applications require explainable AI to ensure fair treatment of citizens and support democratic oversight of government AI systems. Law enforcement applications use explainable AI to provide transparent analysis while maintaining operational effectiveness and constitutional compliance.

Regulatory compliance benefits provide immediate value for organizations operating in regulated industries, enabling them to meet transparency requirements while maintaining AI system performance. Compliance automation reduces the manual effort required for regulatory reporting and audit preparation, while risk mitigation capabilities help organizations avoid penalties and reputational damage from non-compliant AI systems.

Operational efficiency improvements emerge as organizations use explainable AI insights to optimize model performance, identify training data issues, and debug complex AI systems. Model debugging capabilities enable data scientists to quickly identify and resolve performance issues, while feature importance analysis helps optimize data collection and preprocessing efforts. Quality assurance processes benefit from transparent AI decision-making that enables systematic validation and testing.

Stakeholder trust building represents a critical strategic benefit as organizations demonstrate commitment to responsible AI practices and transparent decision-making. Customer confidence increases when organizations can explain AI-driven decisions affecting individual customers, while investor relations improve through demonstrated AI governance and risk management capabilities. Employee acceptance of AI systems increases when workers understand how AI tools support rather than replace human decision-making.

Competitive differentiation opportunities arise as organizations leverage explainable AI capabilities to demonstrate superior governance, customer service, and product quality. Market positioning benefits from thought leadership in responsible AI practices, while customer acquisition improves through transparent and trustworthy AI-powered services. Partnership opportunities expand as organizations with strong explainable AI capabilities become preferred collaborators for AI-driven initiatives.

Strengths:

Weaknesses:

Opportunities:

Threats:

Automated explanation generation represents a major trend as organizations seek to reduce the manual effort required for creating and maintaining AI explanations. Natural language generation capabilities are advancing rapidly, enabling AI systems to produce human-readable explanations automatically. This trend reduces implementation costs and improves scalability while maintaining explanation quality and consistency.

Domain-specific explanation approaches are gaining prominence as organizations recognize that generic explanation methods may not address industry-specific requirements and user needs. Healthcare explanations emphasize clinical reasoning and medical terminology, while financial explanations focus on risk factors and regulatory compliance requirements. This specialization trend creates opportunities for vertical market solutions and industry expertise.

Real-time explanation capabilities are becoming increasingly important as organizations deploy AI systems in time-sensitive applications where immediate transparency is required. Edge computing integration enables explanation generation at the point of decision-making, reducing latency and improving user experience. Streaming explanation services provide continuous transparency for dynamic AI systems that adapt and learn over time.

Interactive explanation interfaces are evolving to support more sophisticated user interactions with AI explanations, enabling stakeholders to explore different scenarios and understand AI behavior patterns. Conversational explanation systems allow users to ask follow-up questions and receive clarifications, while visual explanation tools provide intuitive representations of complex AI decision processes. User experience optimization focuses on making explanations accessible to non-technical stakeholders while maintaining technical accuracy.

Regulatory milestones continue to shape the explainable AI market landscape, with the European Union’s AI Act establishing comprehensive transparency requirements for high-risk AI systems. MarkWide Research analysis indicates that regulatory compliance requirements now influence over 70% of explainable AI adoption decisions in regulated industries. Government initiatives in the United States, Canada, and other regions are developing similar frameworks that will expand market opportunities.

Technology breakthrough announcements from major AI research organizations demonstrate significant advances in explanation quality and computational efficiency. Academic collaborations between universities and industry partners are producing practical solutions that bridge the gap between research innovations and commercial applications. Open-source initiatives are accelerating technology adoption by providing accessible tools and frameworks for explainable AI implementation.

Strategic partnership formations between explainable AI specialists and established technology platforms are creating more comprehensive solution offerings. Acquisition activities by major cloud providers and enterprise software companies indicate strong market confidence and consolidation trends. Investment rounds in explainable AI startups have increased substantially, with funding levels growing by over 180% compared to previous years.

Industry standard development efforts are progressing through collaborative initiatives involving technology providers, academic institutions, and regulatory bodies. Certification programs for explainable AI professionals are emerging to address skills shortages and establish competency standards. Best practice guidelines from industry associations provide implementation frameworks that reduce deployment risks and improve success rates.

Strategic implementation recommendations emphasize the importance of aligning explainable AI initiatives with broader organizational AI governance and risk management strategies. Organizations should prioritize use cases where transparency requirements are most critical, such as regulatory compliance applications and customer-facing decision systems. Phased deployment approaches enable organizations to build expertise and demonstrate value before expanding to more complex applications.

Technology selection criteria should balance explanation quality, performance impact, and integration complexity based on specific organizational requirements and constraints. Proof-of-concept projects provide valuable insights into solution effectiveness and implementation challenges before committing to large-scale deployments. Vendor evaluation processes should include assessment of long-term viability, support capabilities, and regulatory compliance features.

Skills development investments are essential for successful explainable AI implementation, requiring training programs for both technical and business stakeholders. Cross-functional teams combining AI expertise, domain knowledge, and regulatory understanding provide the best foundation for successful projects. Change management strategies should address user adoption challenges and ensure effective integration with existing workflows and decision processes.

Partnership strategies can accelerate implementation and reduce risks by leveraging specialized expertise and proven solutions. System integrator relationships provide implementation support and ongoing maintenance capabilities, while technology partnerships ensure access to the latest innovations and best practices. Regulatory consulting support helps organizations navigate complex compliance requirements and avoid implementation pitfalls.

Market evolution trajectories indicate continued strong growth driven by expanding regulatory requirements, increasing AI adoption, and growing awareness of responsible AI practices. Technology advancement will focus on improving explanation quality while minimizing performance impact, with particular emphasis on automated explanation generation and domain-specific approaches. Market maturation will bring standardization, improved interoperability, and more accessible solutions for smaller organizations.

Regulatory landscape development will significantly influence market dynamics as governments worldwide implement comprehensive AI governance frameworks. Compliance requirements are expected to expand beyond current high-risk applications to include broader categories of AI systems affecting individual rights and public welfare. International coordination efforts may lead to harmonized standards that facilitate global market expansion and technology adoption.

Technology integration trends will see explainable AI capabilities becoming standard features of AI platforms and enterprise software systems rather than separate solutions. Cloud-native architectures will dominate delivery models, providing scalable and cost-effective access to explainable AI capabilities. Edge computing integration will enable real-time explanation generation for time-sensitive applications and privacy-sensitive use cases.

Market growth projections suggest sustained expansion with compound annual growth rates exceeding 20% through the next five years. Geographic expansion will accelerate as emerging markets develop AI governance frameworks and increase enterprise AI adoption. Industry diversification will bring explainable AI applications to new sectors including education, agriculture, and environmental management, creating additional growth opportunities and market segments.

The explainable AI market stands at a critical juncture where regulatory requirements, technological advancement, and organizational recognition of the importance of AI transparency converge to create substantial growth opportunities. Market dynamics indicate sustained expansion driven by compliance needs, ethical AI initiatives, and competitive differentiation strategies across diverse industry sectors.

Technology evolution continues to address fundamental challenges of balancing model performance with interpretability, while emerging solutions provide increasingly sophisticated explanation capabilities. Regulatory frameworks worldwide are establishing comprehensive requirements that will drive long-term market demand and create opportunities for innovative solution providers. Industry adoption patterns demonstrate clear progression from compliance-focused implementations to strategic deployments that leverage explainable AI for operational excellence and competitive advantage.

Future success in the explainable AI market will depend on organizations’ ability to deliver practical solutions that address real-world transparency requirements while maintaining AI system performance and usability. Market participants who focus on industry specialization, technology integration, and user experience optimization will be best positioned to capitalize on emerging opportunities and navigate evolving challenges in this dynamic and rapidly expanding market segment.

What is Explainable AI?

Explainable AI refers to artificial intelligence systems that provide transparent and understandable insights into their decision-making processes. This is crucial in applications such as healthcare, finance, and autonomous vehicles, where understanding AI decisions can significantly impact outcomes.

Who are the key players in the Explainable AI Market?

Key players in the Explainable AI Market include IBM, Google, Microsoft, and H2O.ai, among others. These companies are at the forefront of developing technologies that enhance the interpretability of AI models across various sectors.

What are the main drivers of growth in the Explainable AI Market?

The growth of the Explainable AI Market is driven by increasing regulatory requirements for transparency in AI, the rising demand for ethical AI solutions, and the need for improved trust in AI systems across industries such as finance and healthcare.

What challenges does the Explainable AI Market face?

Challenges in the Explainable AI Market include the complexity of creating models that are both accurate and interpretable, as well as the potential for oversimplification of AI decisions. Additionally, there is a lack of standardized metrics for evaluating explainability.

What opportunities exist in the Explainable AI Market?

Opportunities in the Explainable AI Market include the development of new frameworks for model interpretability, integration of explainable AI in emerging technologies like edge computing, and the potential for enhanced user trust in AI applications across various sectors.

What trends are shaping the Explainable AI Market?

Trends in the Explainable AI Market include the increasing adoption of AI ethics guidelines, advancements in natural language processing for better explanations, and the growing focus on user-centric design in AI systems to enhance interpretability.

Explainable AI Market

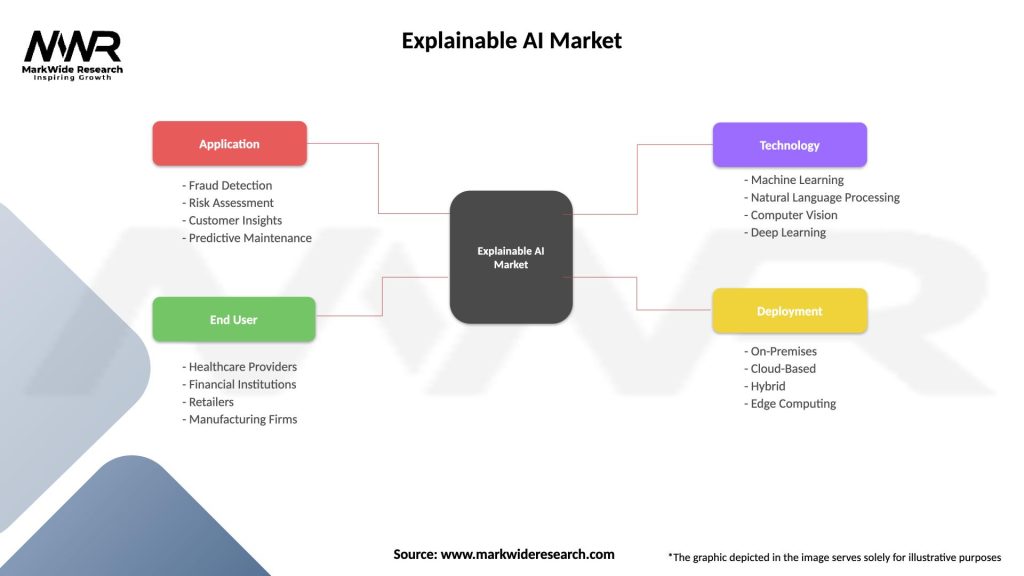

| Segmentation Details | Description |
|---|---|
| Application | Fraud Detection, Risk Assessment, Customer Insights, Predictive Maintenance |
| End User | Healthcare Providers, Financial Institutions, Retailers, Manufacturing Firms |
| Technology | Machine Learning, Natural Language Processing, Computer Vision, Deep Learning |
| Deployment | On-Premises, Cloud-Based, Hybrid, Edge Computing |

Please note: The segmentation can be entirely customized to align with our client’s needs.

Leading companies in the Explainable AI Market

Please note: This is a preliminary list; the final study will feature 18–20 leading companies in this market. The selection of companies in the final report can be customized based on our client’s specific requirements.

North America

o US

o Canada

o Mexico

Europe

o Germany

o Italy

o France

o UK

o Spain

o Denmark

o Sweden

o Austria

o Belgium

o Finland

o Turkey

o Poland

o Russia

o Greece

o Switzerland

o Netherlands

o Norway

o Portugal

o Rest of Europe

Asia Pacific

o China

o Japan

o India

o South Korea

o Indonesia

o Malaysia

o Kazakhstan

o Taiwan

o Vietnam

o Thailand

o Philippines

o Singapore

o Australia

o New Zealand

o Rest of Asia Pacific

South America

o Brazil

o Argentina

o Colombia

o Chile

o Peru

o Rest of South America

The Middle East & Africa

o Saudi Arabia

o UAE

o Qatar

o South Africa

o Israel

o Kuwait

o Oman

o North Africa

o West Africa

o Rest of MEA

Trusted by Global Leaders

Fortune 500 companies, SMEs, and top institutions rely on MWR’s insights to make informed decisions and drive growth.

ISO & IAF Certified

Our certifications reflect a commitment to accuracy, reliability, and high-quality market intelligence trusted worldwide.

Customized Insights

Every report is tailored to your business, offering actionable recommendations to boost growth and competitiveness.

Multi-Language Support

Final reports are delivered in English and major global languages including French, German, Spanish, Italian, Portuguese, Chinese, Japanese, Korean, Arabic, Russian, and more.

Unlimited User Access

Corporate License offers unrestricted access for your entire organization at no extra cost.

Free Company Inclusion

We add 3–4 extra companies of your choice for more relevant competitive analysis — free of charge.

Post-Sale Assistance

Dedicated account managers provide unlimited support, handling queries and customization even after delivery.

GET A FREE SAMPLE REPORT

This free sample study provides a complete overview of the report, including executive summary, market segments, competitive analysis, country level analysis and more.
