444 Alaska Avenue, Suite #BAA205, Torrance, CA 90503 USA

24/7 Customer Support: +1 424 999 9627

Email us at sales@markwideresearch.com


Corporate User License

Unlimited User Access, Post-Sale Support, Free Updates, Reports in English & Major Languages, and more

$3450

Market Overview

The Content Moderation Software market encompasses technologies and solutions designed to monitor, analyze, and manage digital content across platforms and channels. These tools support compliance, safety, and user experience by identifying and mitigating harmful or inappropriate content.

Meaning

Content Moderation Software refers to advanced tools and algorithms that automate the process of reviewing user-generated content (UGC), including text, images, videos, and audio, to enforce community guidelines, prevent abuse, and uphold platform standards. These solutions utilize AI, machine learning, natural language processing (NLP), and computer vision to analyze and categorize content based on predefined criteria.
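To make "categorize content based on predefined criteria" concrete, here is a minimal rule-based sketch for text only. It does not represent any vendor's product. The rule set, the category names, and the allow/review/block thresholds are all hypothetical, and a production system would typically replace the regular expressions with trained NLP or computer vision models.

```python
import re
from dataclasses import dataclass

# Hypothetical rule set: category name -> regex patterns (illustrative only).
RULES = {
    "spam": [r"\bfree money\b", r"\bclick here\b"],
    "harassment": [r"\bidiot\b", r"\bloser\b"],
}


@dataclass
class Decision:
    action: str            # "allow", "review", or "block"
    categories: list[str]  # rule categories that matched


def moderate(text: str) -> Decision:
    """Categorize text against the predefined rules and choose an action."""
    lowered = text.lower()
    matched = [
        category
        for category, patterns in RULES.items()
        if any(re.search(pattern, lowered) for pattern in patterns)
    ]
    if not matched:
        return Decision("allow", [])
    # Assumption: a single matching category is routed to human review,
    # while matches in several categories are blocked outright.
    action = "block" if len(matched) > 1 else "review"
    return Decision(action, matched)
```

Calling `moderate("Click here for FREE MONEY")` matches only the hypothetical `spam` category, so the item would be queued for human review instead of being removed automatically.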

Executive Summary

The Content Moderation Software market is experiencing rapid growth driven by the proliferation of online platforms, increasing concerns over digital safety, and regulatory requirements. Key market players focus on developing scalable and efficient solutions that cater to diverse industries, including social media, e-commerce, gaming, and online marketplaces.

Important Note: The companies listed in the image above are for reference only. The final study will cover 18–20 key players in this market, and the list can be adjusted based on our client’s requirements.

Key Market Insights

Market Drivers

Market Restraints

Market Opportunities

Market Dynamics

The Content Moderation Software market is characterized by evolving technologies, regulatory pressures, and changing consumer behaviors. Key trends include the integration of AI-driven automation, real-time content monitoring, and proactive moderation strategies to maintain platform integrity and user satisfaction.

Regional Analysis

Competitive Landscape

Leading Companies in the Content Moderation Software Market

Please note: This is a preliminary list; the final study will feature 18–20 leading companies in this market. The selection of companies in the final report can be customized based on our client’s specific requirements.

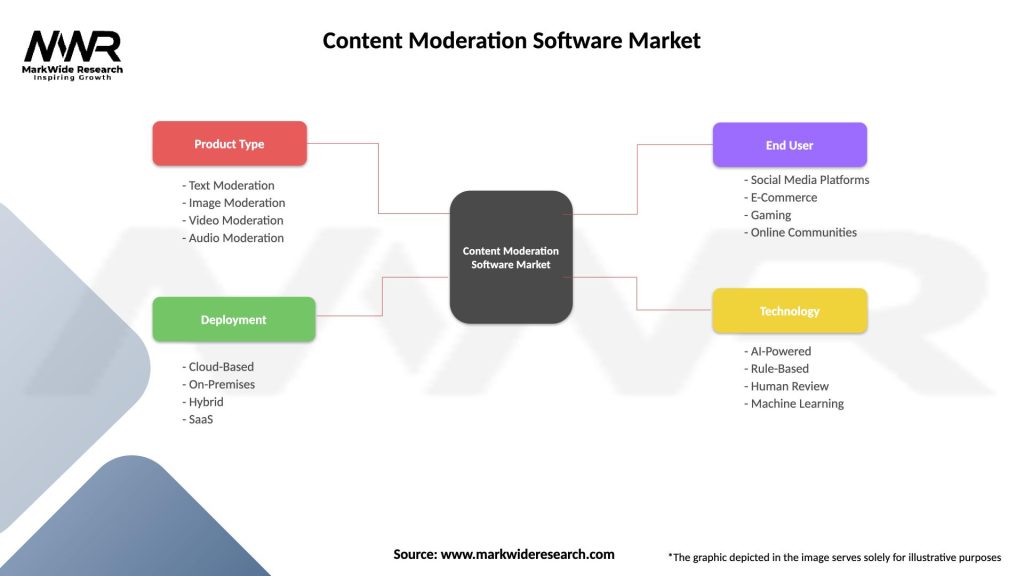

Segmentation

The Content Moderation Software market can be segmented based on deployment mode (cloud-based, on-premises), application (social media, e-commerce, gaming, online marketplaces), moderation type (text, image, video, audio), and geography (North America, Europe, Asia-Pacific, Latin America, Middle East & Africa).

Category-wise Insights

Key Benefits for Industry Participants and Stakeholders

SWOT Analysis

Strengths: AI-driven automation, real-time monitoring, and scalability enhance content moderation efficiency.

Weaknesses: Challenges in AI model accuracy, privacy concerns, and high implementation costs.

Opportunities: Advancements in AI technology, industry-specific solutions, and global expansion of digital platforms.

Threats: Regulatory complexities, competition from alternative moderation approaches, and evolving consumer expectations.

Market Key Trends

Covid-19 Impact

Key Industry Developments

Analyst Suggestions

Future Outlook

The future outlook for the Content Moderation Software market remains optimistic, driven by technological advancements, regulatory developments, and increasing digitalization. Organizations that invest in AI-driven automation, regulatory compliance, and customer-centric moderation strategies are well-positioned to capitalize on growing market opportunities and sustain competitive advantage.

Conclusion

In conclusion, the Content Moderation Software market plays a pivotal role in maintaining digital safety, regulatory compliance, and user trust across online platforms. With advancements in AI technology, increasing regulatory scrutiny, and evolving consumer expectations, effective content moderation solutions are essential for businesses to mitigate risks, enhance operational efficiency, and foster a safe digital ecosystem. As the market evolves, stakeholders must adapt to emerging trends, innovate their offerings, and prioritize ethical content moderation practices to drive sustainable growth and address evolving industry challenges.

What is Content Moderation Software?

Content Moderation Software refers to tools and platforms designed to monitor, filter, and manage user-generated content on digital platforms. These solutions help ensure compliance with community guidelines and legal regulations by identifying inappropriate or harmful content.

Who are the key players in the Content Moderation Software Market?

Key players in the Content Moderation Software Market include Microsoft, Google, and Amazon Web Services, among others. These companies provide moderation tools and AI-driven solutions that improve content safety and user experience.

What are the main drivers of growth in the Content Moderation Software Market?

The main drivers of growth in the Content Moderation Software Market include the increasing need for online safety, the rise of social media platforms, and the growing regulatory pressures on content management. These factors push businesses to adopt effective moderation solutions.

What challenges does the Content Moderation Software Market face?

The Content Moderation Software Market faces challenges such as the difficulty in accurately identifying contextually inappropriate content, the potential for bias in AI algorithms, and the need for continuous updates to keep up with evolving user behavior and regulations.

What opportunities exist in the Content Moderation Software Market?

Opportunities in the Content Moderation Software Market include the development of more sophisticated AI technologies, the expansion of services to new industries like gaming and e-commerce, and the increasing demand for multilingual moderation solutions to cater to diverse user bases.

What trends are shaping the Content Moderation Software Market?

Trends shaping the Content Moderation Software Market include the integration of machine learning for improved accuracy, the use of real-time moderation tools, and a growing emphasis on user privacy and ethical considerations in content management.

Content Moderation Software Market

| Segmentation Details | Description |
|---|---|
| Product Type | Text Moderation, Image Moderation, Video Moderation, Audio Moderation |
| Deployment | Cloud-Based, On-Premises, Hybrid, SaaS |
| End User | Social Media Platforms, E-Commerce, Gaming, Online Communities |
| Technology | AI-Powered, Rule-Based, Human Review, Machine Learning |

Please note: The segmentation can be entirely customized to align with our client’s needs.


North America

- US
- Canada
- Mexico

Europe

- Germany
- Italy
- France
- UK
- Spain
- Denmark
- Sweden
- Austria
- Belgium
- Finland
- Turkey
- Poland
- Russia
- Greece
- Switzerland
- Netherlands
- Norway
- Portugal
- Rest of Europe

Asia Pacific

- China
- Japan
- India
- South Korea
- Indonesia
- Malaysia
- Kazakhstan
- Taiwan
- Vietnam
- Thailand
- Philippines
- Singapore
- Australia
- New Zealand
- Rest of Asia Pacific

South America

- Brazil
- Argentina
- Colombia
- Chile
- Peru
- Rest of South America

The Middle East & Africa

- Saudi Arabia
- UAE
- Qatar
- South Africa
- Israel
- Kuwait
- Oman
- North Africa
- West Africa
- Rest of MEA

Trusted by Global Leaders

Fortune 500 companies, SMEs, and top institutions rely on MWR’s insights to make informed decisions and drive growth.

ISO & IAF Certified

Our certifications reflect a commitment to accuracy, reliability, and high-quality market intelligence trusted worldwide.

Customized Insights

Every report is tailored to your business, offering actionable recommendations to boost growth and competitiveness.

Multi-Language Support

Final reports are delivered in English and major global languages including French, German, Spanish, Italian, Portuguese, Chinese, Japanese, Korean, Arabic, Russian, and more.

Unlimited User Access

Corporate License offers unrestricted access for your entire organization at no extra cost.

Free Company Inclusion

We add 3–4 extra companies of your choice for more relevant competitive analysis — free of charge.

Post-Sale Assistance

Dedicated account managers provide unlimited support, handling queries and customization even after delivery.

GET A FREE SAMPLE REPORT

This free sample study provides an overview of the report, including the executive summary, market segments, competitive analysis, country-level analysis, and more.

ISO AND IAF CERTIFIED
