444 Alaska Avenue

Suite #BAA205 Torrance, CA 90503 USA

+1 424 999 9627

24/7 Customer Support

Email us at sales@markwideresearch.com



The AI infrastructure solutions market represents a rapidly evolving landscape that encompasses the fundamental hardware, software, and networking components required to support artificial intelligence applications across diverse industries. This comprehensive ecosystem includes specialized processors, high-performance computing systems, cloud platforms, data storage solutions, and networking infrastructure designed to handle the intensive computational demands of machine learning and deep learning workloads.

Market dynamics indicate unprecedented growth driven by the increasing adoption of AI technologies across sectors including healthcare, automotive, financial services, and manufacturing. The infrastructure supporting these AI implementations requires sophisticated hardware architectures, including graphics processing units (GPUs), tensor processing units (TPUs), field-programmable gate arrays (FPGAs), and application-specific integrated circuits (ASICs). These components work in conjunction with advanced software frameworks and cloud-native platforms to deliver scalable AI capabilities.

Enterprise adoption of AI infrastructure solutions has accelerated significantly, with organizations recognizing the strategic importance of robust computational foundations for their digital transformation initiatives. The market encompasses both on-premises infrastructure deployments and cloud-based solutions, with hybrid approaches gaining considerable traction. MarkWide Research analysis indicates that enterprise AI workloads are growing at approximately 42% annually, driving substantial demand for specialized infrastructure components.
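An annual growth rate is easier to interpret as a doubling time or a multi-year multiplier. The sketch below assumes, for illustration only, that the cited ~42% rate stays constant; the function names are hypothetical helpers, not part of any MarkWide Research model.

```python
import math


def growth_multiplier(annual_rate: float, years: float) -> float:
    """Size multiplier after compounding at `annual_rate` (0.42 == 42%) for `years`."""
    return (1.0 + annual_rate) ** years


def doubling_time(annual_rate: float) -> float:
    """Years for a quantity to double at a constant compound annual rate."""
    if annual_rate <= 0:
        raise ValueError("a doubling time only exists for positive growth rates")
    return math.log(2.0) / math.log1p(annual_rate)


if __name__ == "__main__":
    rate = 0.42  # the ~42% annual enterprise workload growth cited above
    print(f"doubling time: {doubling_time(rate):.2f} years")        # about 1.98
    print(f"3-year multiplier: {growth_multiplier(rate, 3):.2f}x")  # about 2.86
```

At a steady 42% a year, workload volume would roughly double in just under two years and nearly triple in three.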

Technological advancement in AI infrastructure continues to push the boundaries of computational efficiency and performance. Edge computing integration, quantum computing research, and neuromorphic chip development represent emerging frontiers that are reshaping the infrastructure landscape. The convergence of 5G networks, Internet of Things (IoT) devices, and AI processing capabilities is creating new opportunities for distributed AI infrastructure deployments.

The AI infrastructure solutions market refers to the comprehensive ecosystem of hardware, software, and services that provide the foundational computational resources necessary for developing, training, deploying, and managing artificial intelligence applications at scale. This market encompasses specialized computing hardware, storage systems, networking solutions, and software platforms specifically designed to handle the unique requirements of AI workloads.

Core components of AI infrastructure include high-performance processors optimized for parallel computing, distributed storage systems capable of handling massive datasets, high-bandwidth networking infrastructure for data transfer, and specialized software frameworks that orchestrate AI workflows. These elements work together to create environments where machine learning models can be trained efficiently and AI applications can operate with optimal performance.

Infrastructure categories span from edge devices that bring AI processing closer to data sources, to massive data center installations that support large-scale AI training operations. The market also includes cloud-based AI infrastructure services that provide on-demand access to computational resources, enabling organizations to scale their AI initiatives without significant upfront capital investments.

Strategic positioning of the AI infrastructure solutions market reflects its critical role as the backbone of the global artificial intelligence revolution. Organizations across industries are investing heavily in infrastructure capabilities that can support increasingly sophisticated AI applications, from natural language processing and computer vision to autonomous systems and predictive analytics.

Market segmentation reveals diverse deployment models including public cloud, private cloud, hybrid cloud, and on-premises solutions, each serving specific organizational needs and compliance requirements. The hardware segment encompasses processors, memory systems, storage solutions, and networking equipment, while the software segment includes AI frameworks, orchestration platforms, and management tools.

Growth drivers include the exponential increase in data generation, the need for real-time AI processing capabilities, and the democratization of AI technologies across small and medium enterprises. Edge AI deployment is experiencing particularly strong growth, with adoption rates increasing by approximately 38% annually as organizations seek to reduce latency and improve data privacy.

Competitive dynamics feature established technology giants alongside innovative startups, creating a vibrant ecosystem of solution providers. The market is characterized by rapid technological evolution, strategic partnerships, and significant research and development investments focused on next-generation AI processing capabilities.

Fundamental insights into the AI infrastructure solutions market reveal several critical trends shaping its evolution.

Market maturation is evident in the standardization of AI infrastructure components and the emergence of reference architectures that guide enterprise deployments. The integration of artificial intelligence into the infrastructure management layer itself is creating self-optimizing systems that can adapt to changing workload demands automatically.

Primary drivers propelling the AI infrastructure solutions market forward include the exponential growth in data generation and the increasing sophistication of AI applications requiring more powerful computational resources. Organizations are recognizing that traditional IT infrastructure cannot adequately support the parallel processing demands of machine learning algorithms and deep neural networks.

Digital transformation initiatives across industries are creating unprecedented demand for AI capabilities, driving organizations to invest in infrastructure that can support both current and future AI workloads. The competitive advantage gained through AI implementation is motivating enterprises to prioritize infrastructure investments that enable faster model training, more accurate predictions, and real-time decision-making capabilities.

Technological advancement in AI algorithms is simultaneously driving infrastructure requirements and enabling more efficient processing architectures. The development of transformer models, generative AI applications, and large language models requires infrastructure solutions capable of handling massive parameter spaces and complex computational graphs.

Regulatory compliance and data sovereignty requirements are driving demand for on-premises and private cloud AI infrastructure solutions, particularly in highly regulated industries such as healthcare, finance, and government. Organizations need infrastructure that provides complete control over data processing while maintaining the performance characteristics required for AI applications.

Cost optimization pressures are encouraging organizations to invest in more efficient AI infrastructure solutions that can deliver better performance per dollar spent. The total cost of ownership considerations include not only hardware acquisition costs but also energy consumption, cooling requirements, and operational management expenses.
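The cost components listed above can be combined into a simple multi-year total cost of ownership (TCO) estimate. This is an illustrative sketch only: the `ClusterCosts` fields, the use of power usage effectiveness (PUE) to fold cooling and other facility overhead into the energy bill, and every example figure are assumptions, not data from this report.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class ClusterCosts:
    hardware_capex: float       # purchase price of servers, accelerators, networking (USD)
    it_power_kw: float          # average IT load drawn by the cluster (kW)
    pue: float                  # facility power / IT power; >= 1.0, captures cooling overhead
    electricity_per_kwh: float  # energy price (USD per kWh)
    annual_ops: float           # staff, support contracts, software licences (USD per year)


def total_cost_of_ownership(c: ClusterCosts, years: int) -> float:
    """Acquisition cost plus `years` of energy (IT load scaled by PUE) and operations."""
    facility_kwh_per_year = c.it_power_kw * c.pue * HOURS_PER_YEAR
    annual_energy = facility_kwh_per_year * c.electricity_per_kwh
    return c.hardware_capex + years * (annual_energy + c.annual_ops)


if __name__ == "__main__":
    # Hypothetical mid-size training cluster; every figure here is illustrative.
    cluster = ClusterCosts(
        hardware_capex=1_000_000,
        it_power_kw=50,
        pue=1.4,
        electricity_per_kwh=0.10,
        annual_ops=150_000,
    )
    for years in (1, 3, 5):
        print(f"{years}-year TCO: ${total_cost_of_ownership(cluster, years):,.0f}")
```

With these made-up inputs, energy and operations add about 21% of the hardware price every year, which is why acquisition cost alone understates the real investment.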

Significant barriers to AI infrastructure adoption include the substantial capital investment requirements for high-performance computing systems and the complexity of designing and implementing AI-optimized infrastructure architectures. Many organizations struggle with the technical expertise required to properly configure and manage sophisticated AI infrastructure environments.

Skills shortage in AI infrastructure management represents a critical constraint, with organizations finding it difficult to recruit and retain professionals who understand both AI workload characteristics and infrastructure optimization techniques. This talent gap is particularly acute in specialized areas such as distributed computing, high-performance networking, and AI-specific hardware configuration.

Energy consumption and environmental concerns associated with AI infrastructure operations are creating challenges for organizations committed to sustainability goals. The power requirements for training large AI models and supporting inference workloads at scale can be substantial, leading to increased operational costs and environmental impact considerations.

Technology obsolescence risks concern organizations making significant infrastructure investments, as the rapid pace of AI hardware and software evolution can quickly render current solutions outdated. The challenge of balancing cutting-edge performance with investment longevity requires careful strategic planning and flexible architecture design.

Integration complexity with existing IT systems and workflows can create deployment challenges, particularly for organizations with legacy infrastructure investments. The need to maintain operational continuity while implementing AI infrastructure upgrades requires sophisticated change management and technical integration capabilities.

Emerging opportunities in the AI infrastructure solutions market are driven by the expansion of AI applications into new industries and use cases, creating demand for specialized infrastructure solutions tailored to specific vertical requirements. The healthcare sector, for example, presents significant opportunities for AI infrastructure optimized for medical imaging, drug discovery, and patient monitoring applications.

Edge AI deployment represents a substantial growth opportunity as organizations seek to bring AI processing capabilities closer to data sources and end users. This trend is creating demand for compact, power-efficient AI infrastructure solutions that can operate in distributed environments while maintaining connectivity to centralized management systems.

Quantum computing integration presents long-term opportunities for AI infrastructure providers to develop hybrid classical-quantum systems that can leverage quantum advantages for specific AI algorithms while maintaining compatibility with existing infrastructure investments. Early research and development in this area is positioning companies for future market leadership.

Sustainability initiatives are creating opportunities for energy-efficient AI infrastructure solutions that can deliver high performance while minimizing environmental impact. Green AI infrastructure technologies, including advanced cooling systems, renewable energy integration, and power-efficient processors, are becoming increasingly important to environmentally conscious organizations.

Small and medium enterprise adoption of AI technologies is creating opportunities for simplified, cost-effective infrastructure solutions that can democratize access to AI capabilities. Cloud-based AI infrastructure services and turnkey solutions are making it possible for smaller organizations to implement AI applications without significant upfront investments.

Dynamic forces shaping the AI infrastructure solutions market include the continuous evolution of AI algorithms and the corresponding need for more sophisticated computational architectures. The interplay between hardware innovation and software optimization is driving rapid advancement in infrastructure capabilities and efficiency.

Competitive pressures are intensifying as traditional IT infrastructure providers compete with specialized AI hardware companies and cloud service providers. This competition is accelerating innovation and driving down costs while simultaneously pushing the boundaries of performance and capability.

Supply chain considerations have become increasingly important as organizations recognize the strategic importance of reliable access to AI infrastructure components. Geopolitical factors, semiconductor manufacturing capacity, and raw material availability all influence market dynamics and pricing structures.

Standards development and industry collaboration are helping to establish common frameworks and interfaces that enable greater interoperability between AI infrastructure components from different vendors. These standardization efforts are reducing deployment complexity and enabling more flexible, multi-vendor solutions.

Investment patterns show increasing focus on infrastructure solutions that can support multiple AI workload types and adapt to changing requirements over time. Organizations are prioritizing flexible, scalable architectures that can evolve with their AI maturity and changing business needs.

Comprehensive analysis of the AI infrastructure solutions market employs multiple research methodologies to ensure accuracy and completeness of market insights. Primary research includes extensive interviews with industry executives, technology leaders, and end-users across diverse sectors to understand current challenges, requirements, and future plans.

Secondary research encompasses analysis of industry reports, technical publications, patent filings, and regulatory documents to identify trends, technological developments, and market dynamics. This research approach provides a foundation for understanding the broader context of AI infrastructure evolution and adoption patterns.

Market modeling techniques incorporate quantitative analysis of deployment patterns, technology adoption rates, and investment flows to project future market development. These models account for various scenarios including accelerated AI adoption, economic fluctuations, and technological breakthrough impacts.

Technology assessment involves detailed evaluation of emerging AI infrastructure technologies, including performance benchmarking, cost analysis, and scalability evaluation. This technical analysis helps identify which technologies are likely to gain market traction and influence future infrastructure requirements.

Validation processes ensure research findings are accurate and representative through cross-referencing multiple data sources, expert review, and market feedback. This rigorous approach maintains the reliability and credibility of market analysis and projections.

North American markets lead global AI infrastructure adoption, driven by the concentration of technology companies, research institutions, and early-adopting enterprises. The region accounts for approximately 45% of global AI infrastructure deployments, with particularly strong growth in cloud-based solutions and edge computing implementations.

European markets demonstrate strong growth in AI infrastructure investments, with emphasis on data sovereignty, privacy compliance, and sustainable computing solutions. The region’s focus on regulatory compliance is driving demand for on-premises and private cloud AI infrastructure solutions that provide complete data control.

The Asia-Pacific region shows the fastest growth in AI infrastructure adoption, with countries such as China, Japan, and South Korea making substantial investments in AI capabilities across manufacturing, telecommunications, and smart city initiatives. The region’s growth rate of approximately 52% annually reflects aggressive AI adoption strategies and government support for AI development.

Emerging markets in Latin America, Africa, and Southeast Asia are beginning to invest in AI infrastructure as costs decrease and cloud-based solutions become more accessible. These markets often favor cloud-first approaches that minimize upfront capital requirements while providing access to advanced AI capabilities.

Regional specialization is emerging as different geographic areas focus on specific AI infrastructure strengths, such as edge computing in Asia-Pacific, privacy-focused solutions in Europe, and high-performance computing in North America. This specialization is creating opportunities for regional solution providers while driving global technology exchange.

Market leadership in AI infrastructure solutions is distributed among several categories of providers, each bringing unique strengths and capabilities to the market.

Competitive strategies focus on vertical integration, strategic partnerships, and continuous innovation in both hardware and software components. Companies are investing heavily in research and development to maintain technological leadership while also building comprehensive ecosystems that simplify AI infrastructure deployment and management.

Market consolidation trends include acquisitions of specialized AI infrastructure companies by larger technology providers, creating more comprehensive solution portfolios and accelerating technology development cycles.

Technology segmentation of the AI infrastructure solutions market reveals distinct categories based on computational approaches and deployment architectures, with the market segmented by hardware type, deployment model, and application vertical.

Hardware infrastructure represents the largest segment of the AI infrastructure solutions market, with GPU-based systems maintaining dominance due to their parallel processing capabilities and mature software ecosystem. The segment is experiencing rapid innovation with new architectures optimized for specific AI workloads and improved energy efficiency.

Software infrastructure is growing rapidly as organizations recognize the importance of orchestration, management, and optimization tools for AI workloads. Container orchestration platforms, AI-specific operating systems, and automated deployment tools are becoming essential components of comprehensive AI infrastructure solutions.

Cloud-based solutions continue to gain market share as organizations seek to reduce capital expenditure and access the latest AI infrastructure technologies without significant upfront investments. The flexibility and scalability of cloud deployments make them particularly attractive for organizations with variable or unpredictable AI workload requirements.
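One way to frame the cloud-versus-ownership decision is a break-even utilization: owned hardware costs the same whether it is busy or idle, while cloud capacity is billed only when used. The sketch below assumes straight-line amortization and a flat hourly cloud rate; the function names and example prices are hypothetical.

```python
HOURS_PER_YEAR = 8760


def annual_cloud_cost(hourly_rate: float, utilization: float) -> float:
    """On-demand capacity: billed only for the fraction of the year it is used."""
    return hourly_rate * HOURS_PER_YEAR * utilization


def annual_on_prem_cost(capex: float, amortization_years: float, annual_opex: float) -> float:
    """Owned capacity: amortized purchase price plus running costs, paid busy or idle."""
    return capex / amortization_years + annual_opex


def break_even_utilization(hourly_rate: float, capex: float,
                           amortization_years: float, annual_opex: float) -> float:
    """Fraction of the year at which owning and renting cost the same.

    Above it, owning is cheaper; a value above 1.0 means renting always wins.
    """
    owned = annual_on_prem_cost(capex, amortization_years, annual_opex)
    return owned / (hourly_rate * HOURS_PER_YEAR)


if __name__ == "__main__":
    # Hypothetical figures for one multi-GPU server versus an equivalent cloud instance.
    u = break_even_utilization(hourly_rate=30.0, capex=250_000,
                               amortization_years=4, annual_opex=25_000)
    print(f"owning pays off above {u:.0%} utilization")
```

Under these assumed prices the break-even sits near one third utilization, so bursty or unpredictable workloads that stay below it favor the cloud, consistent with the point above.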

Edge infrastructure is emerging as a high-growth category driven by the need for real-time AI processing, data privacy requirements, and bandwidth optimization. Edge AI infrastructure solutions must balance performance requirements with power consumption, physical size, and environmental constraints.

Industry-specific solutions are gaining traction as AI infrastructure providers develop specialized offerings tailored to the unique requirements of specific vertical markets. These solutions often include pre-configured hardware, optimized software stacks, and industry-specific AI models and frameworks.

Enterprise organizations benefit from AI infrastructure solutions through improved operational efficiency, enhanced decision-making capabilities, and competitive advantages gained through AI-powered applications. The infrastructure provides the foundation for digital transformation initiatives and enables organizations to leverage their data assets more effectively.

Technology providers gain opportunities to participate in a rapidly growing market with strong demand for innovative solutions. The AI infrastructure market offers multiple entry points for companies with expertise in hardware design, software development, cloud services, or systems integration.

Research institutions benefit from access to powerful computational resources that enable advanced AI research and development. AI infrastructure solutions provide the computational scale necessary for training large models and conducting complex experiments that advance the state of artificial intelligence.

Government agencies can leverage AI infrastructure to improve public services, enhance security capabilities, and drive economic development through AI innovation. The infrastructure enables government organizations to implement AI applications for citizen services, regulatory compliance, and operational optimization.

Small and medium enterprises gain access to AI capabilities that were previously available only to large organizations with substantial IT resources. Cloud-based AI infrastructure solutions democratize access to advanced computational resources and enable smaller companies to compete with AI-powered applications.

System integrators and consultants benefit from growing demand for AI infrastructure deployment and management services. The complexity of AI infrastructure creates opportunities for specialized service providers who can help organizations design, implement, and optimize their AI infrastructure investments.


Convergence trends are reshaping the AI infrastructure landscape as traditional boundaries between computing, storage, and networking blur. Software-defined infrastructure approaches are enabling more flexible and efficient resource allocation, while hyperconverged systems are simplifying deployment and management of AI workloads.

Sustainability initiatives are driving the development of more energy-efficient AI infrastructure solutions as organizations balance performance requirements with environmental responsibility.

Democratization of AI infrastructure is occurring through cloud services, pre-configured solutions, and simplified management tools that make advanced AI capabilities accessible to organizations without extensive technical expertise. This trend is expanding the market beyond traditional technology companies to include organizations across all industries.

Edge-cloud integration is creating hybrid architectures that optimize AI processing across distributed environments. These solutions balance the need for real-time processing at the edge with the computational power and storage capacity available in centralized cloud environments.
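The edge-versus-cloud balance can be expressed as a per-request placement policy. The sketch below is a hypothetical rule, not a description of any vendor's product: the `InferenceRequest` fields, the 16 GB edge memory limit, and the 80 ms cloud round-trip figure are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class InferenceRequest:
    latency_budget_ms: float      # deadline the application can tolerate, end to end
    model_size_gb: float          # memory the model needs to run
    contains_personal_data: bool  # whether raw inputs should stay near their source


def choose_tier(req: InferenceRequest,
                edge_memory_gb: float = 16.0,
                cloud_round_trip_ms: float = 80.0) -> str:
    """Return "edge" or "cloud" for a single request under a simple placement policy."""
    if req.model_size_gb > edge_memory_gb:
        # The model does not fit on the edge device, so only the central tier can run it.
        return "cloud"
    if req.latency_budget_ms < cloud_round_trip_ms or req.contains_personal_data:
        # The network round trip alone would miss the deadline, or the data should stay local.
        return "edge"
    # Otherwise use the larger pooled capacity of the central tier.
    return "cloud"


if __name__ == "__main__":
    print(choose_tier(InferenceRequest(20, 2, False)))    # tight deadline, small model
    print(choose_tier(InferenceRequest(500, 40, False)))  # relaxed deadline, large model
```

A production scheduler would also weigh edge load, bandwidth cost, and model freshness; the point here is only the ordering of feasibility, latency, and privacy checks.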

Automated infrastructure management is becoming standard as AI-powered tools take over routine configuration, optimization, and maintenance tasks. These self-managing systems reduce operational complexity and costs while improving performance and reliability.
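A common building block of such self-managing systems is a proportional autoscaling rule of the form desired = ceil(current × currentMetric / targetMetric), the form documented for the Kubernetes Horizontal Pod Autoscaler. The function below is a standalone re-implementation for illustration, not Kubernetes code; its replica bounds and 10% tolerance defaults are assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 1,
                     max_replicas: int = 64, tolerance: float = 0.1) -> int:
    """Proportional scaling: resize so average utilization moves toward the target."""
    if target_utilization <= 0:
        raise ValueError("target utilization must be positive")
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to target: leave the deployment alone to avoid oscillation.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, 0.75, 0.5))  # 6: scale out under load
    print(desired_replicas(4, 0.25, 0.5))  # 2: scale in when mostly idle
```

The tolerance band prevents the controller from resizing on small metric fluctuations, which is one reason such rules can run unattended.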

Quantum-classical hybrid systems are emerging as early-stage solutions that combine traditional AI infrastructure with quantum computing capabilities for specific algorithms and applications. While still in development, these systems may indicate one future direction for high-performance AI infrastructure.

Recent technological breakthroughs in AI infrastructure include the development of neuromorphic computing architectures that mimic brain-like processing patterns, offering potential advantages in energy efficiency and learning capabilities. These developments represent fundamental advances in how AI infrastructure processes information and adapts to changing requirements.

Strategic partnerships between hardware manufacturers, cloud service providers, and software companies are creating more comprehensive AI infrastructure ecosystems. These collaborations are accelerating innovation and providing customers with integrated solutions that combine best-of-breed components from multiple vendors.

Investment activities in AI infrastructure startups and research initiatives have reached unprecedented levels, with venture capital and corporate investment driving innovation in specialized processors, software platforms, and deployment methodologies. MarkWide Research (MWR) data indicates that AI infrastructure investment has grown by approximately 67% annually over the past three years.

Standardization efforts are progressing in areas such as AI model formats, container orchestration, and hardware interfaces, enabling greater interoperability and reducing vendor lock-in concerns. These standards are facilitating more flexible and portable AI infrastructure deployments.

Regulatory developments are beginning to address AI infrastructure requirements, particularly in areas such as data protection, algorithmic transparency, and environmental impact. These regulations are influencing infrastructure design and deployment practices across different geographic regions.

Open source initiatives are gaining momentum in AI infrastructure software, providing alternatives to proprietary solutions and fostering innovation through community collaboration. These projects are reducing barriers to AI infrastructure adoption and enabling customization for specific organizational needs.

Strategic recommendations for organizations considering AI infrastructure investments emphasize the importance of developing a comprehensive infrastructure strategy that aligns with long-term AI objectives and business goals. Organizations should prioritize flexibility and scalability in their infrastructure choices to accommodate evolving AI requirements and technological advances.

Technology selection should focus on solutions that provide strong ecosystem support, including software frameworks, development tools, and community resources. Organizations should evaluate not only current performance characteristics but also the vendor’s roadmap and commitment to ongoing innovation and support.

Skills development initiatives should be prioritized alongside infrastructure investments to ensure organizations can effectively deploy, manage, and optimize their AI infrastructure. This includes training existing IT staff and recruiting specialists with AI infrastructure expertise.

Pilot project approaches are recommended for organizations new to AI infrastructure, allowing them to gain experience and validate approaches before making larger investments. These pilot projects should focus on specific use cases with clear success metrics and scalability potential.

Vendor relationship management should emphasize partnerships rather than simple procurement relationships, as the complexity and rapid evolution of AI infrastructure require ongoing collaboration and support. Organizations should seek vendors who provide comprehensive support, training, and strategic guidance.

Security considerations must be integrated into AI infrastructure planning from the beginning, as AI systems often process sensitive data and make critical decisions. Security architecture should address both traditional IT security concerns and AI-specific vulnerabilities such as adversarial attacks and model poisoning.

Long-term projections for the AI infrastructure solutions market indicate continued strong growth driven by expanding AI adoption across industries and the development of more sophisticated AI applications. The market is expected to maintain growth rates of approximately 35% annually over the next five years as AI becomes increasingly central to business operations and competitive strategy.
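Compounding makes the projected rate easier to interpret. Assuming, for illustration only, that the ~35% rate holds constant for all five years, a market of initial size $S_0$ would grow as:

```latex
S_5 = S_0 (1 + r)^{n} = S_0 (1.35)^{5} \approx 4.48\, S_0
```

In other words, the market would end the period at roughly four and a half times its starting size; actual year-to-year rates will vary around any such average.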

Technological evolution will likely focus on improving energy efficiency, reducing costs, and simplifying deployment and management processes. Advances in chip design, cooling technologies, and software optimization will enable more powerful and accessible AI infrastructure solutions.

Market maturation will bring greater standardization, improved interoperability, and more predictable total cost of ownership models. This maturation will make AI infrastructure more accessible to a broader range of organizations and enable more strategic long-term planning.

Emerging applications in areas such as autonomous systems, personalized medicine, and climate modeling will drive demand for specialized AI infrastructure capabilities. These applications will require infrastructure solutions that can handle unique computational patterns and performance requirements.

Geographic expansion will see AI infrastructure adoption spreading to emerging markets as costs decrease and local expertise develops. This expansion will create new opportunities for infrastructure providers and drive innovation in cost-effective, locally-optimized solutions.

Integration trends will see AI infrastructure becoming more deeply embedded in overall IT architecture, with AI capabilities integrated into traditional enterprise systems and workflows. This integration will require infrastructure solutions that can seamlessly connect with existing systems while providing advanced AI processing capabilities.

The AI infrastructure solutions market represents a critical foundation for the global artificial intelligence revolution, providing the computational resources and technological capabilities necessary to support increasingly sophisticated AI applications across diverse industries. The market’s rapid growth reflects the strategic importance of AI technologies and the recognition that robust infrastructure is essential for successful AI implementation.

Market dynamics continue to evolve as technological advancement drives new capabilities while competitive pressures accelerate innovation and reduce costs. The convergence of hardware and software solutions, the expansion of cloud-based offerings, and the emergence of edge computing architectures are creating a more diverse and capable ecosystem of AI infrastructure options.

Future success in the AI infrastructure market will depend on the ability to balance performance, efficiency, and accessibility while addressing emerging challenges such as sustainability, security, and skills shortages. Organizations that can navigate these challenges while delivering innovative solutions will be well-positioned to capitalize on the continued growth and evolution of the AI infrastructure solutions market.

What are AI Infrastructure Solutions?

AI Infrastructure Solutions refer to the hardware and software components that support the development, deployment, and management of artificial intelligence applications. This includes cloud computing resources, data storage systems, and specialized hardware like GPUs designed for machine learning tasks.

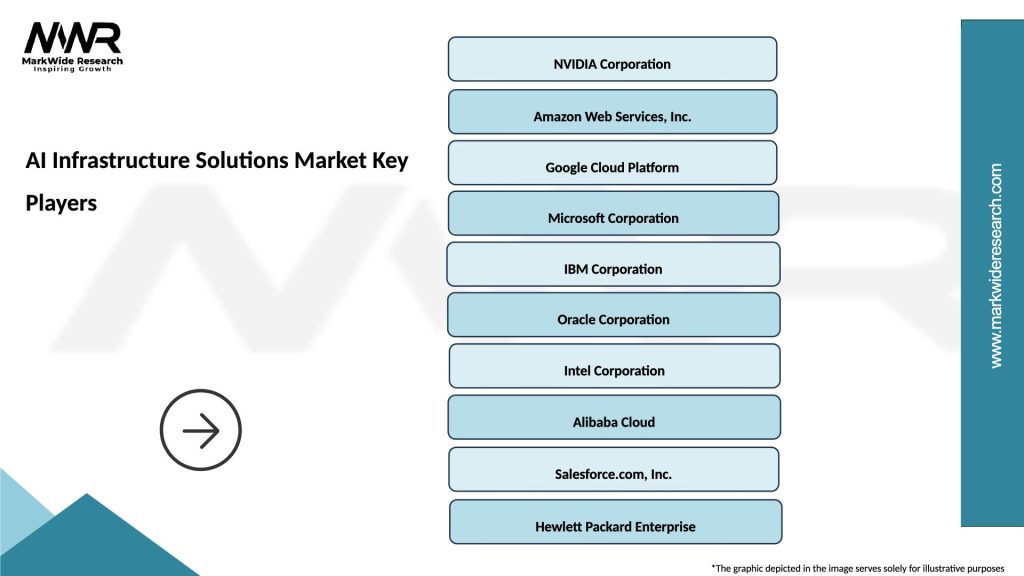

What are the key players in the AI Infrastructure Solutions Market?

Key players in the AI Infrastructure Solutions Market include NVIDIA, IBM, Google Cloud, and Microsoft Azure, among others. These companies provide essential tools and platforms for AI development and deployment.

What are the main drivers of growth in the AI Infrastructure Solutions Market?

The main drivers of growth in the AI Infrastructure Solutions Market include the increasing demand for AI applications across various industries, advancements in cloud computing technologies, and the need for efficient data processing capabilities.

What challenges does the AI Infrastructure Solutions Market face?

Challenges in the AI Infrastructure Solutions Market include high implementation costs, the complexity of integrating AI systems with existing infrastructure, and concerns regarding data privacy and security.

What opportunities exist in the AI Infrastructure Solutions Market?

Opportunities in the AI Infrastructure Solutions Market include the growing adoption of AI in sectors like healthcare, finance, and manufacturing, as well as the potential for innovations in edge computing and AI-driven analytics.

What trends are shaping the AI Infrastructure Solutions Market?

Trends shaping the AI Infrastructure Solutions Market include the rise of hybrid cloud solutions, increased focus on sustainability in data centers, and the development of more efficient AI algorithms that require less computational power.

AI Infrastructure Solutions Market

| Segment | Categories |
|---|---|
| Deployment | Public Cloud, Private Cloud, Hybrid Cloud, On-Premises |
| Solution | Machine Learning, Data Analytics, Natural Language Processing, Computer Vision |
| End User | Healthcare Providers, Financial Institutions, Retail Chains, Manufacturing Firms |
| Technology | Edge Computing, Quantum Computing, Blockchain, Serverless Computing |

Please note: The segmentation can be entirely customized to align with our client’s needs.

Leading companies in the AI Infrastructure Solutions Market

Please note: This is a preliminary list; the final study will feature 18–20 leading companies in this market. The selection of companies in the final report can be customized based on our client’s specific requirements.

North America: US, Canada, Mexico

Europe: Germany, Italy, France, UK, Spain, Denmark, Sweden, Austria, Belgium, Finland, Turkey, Poland, Russia, Greece, Switzerland, Netherlands, Norway, Portugal, Rest of Europe

Asia Pacific: China, Japan, India, South Korea, Indonesia, Malaysia, Kazakhstan, Taiwan, Vietnam, Thailand, Philippines, Singapore, Australia, New Zealand, Rest of Asia Pacific

South America: Brazil, Argentina, Colombia, Chile, Peru, Rest of South America

The Middle East & Africa: Saudi Arabia, UAE, Qatar, South Africa, Israel, Kuwait, Oman, North Africa, West Africa, Rest of MEA

