444 Alaska Avenue

Suite #BAA205 Torrance, CA 90503 USA

+1 424 999 9627

24/7 Customer Support

sales@markwideresearch.com

Email us at

The United Kingdom data center networking market represents a critical infrastructure segment experiencing unprecedented growth driven by digital transformation initiatives across industries. Cloud adoption has accelerated significantly, with organizations migrating workloads to hybrid and multi-cloud environments, creating substantial demand for advanced networking solutions. The market encompasses various networking components including switches, routers, network security appliances, and software-defined networking solutions that enable seamless data flow within modern data centers.

Digital transformation initiatives across the UK have intensified following the pandemic, with businesses recognizing the importance of robust data center infrastructure. The market is witnessing a 12.5% annual growth rate in network equipment deployment, reflecting the increasing complexity of enterprise IT environments. Edge computing adoption is particularly driving demand for distributed networking solutions, as organizations seek to reduce latency and improve application performance for end users.

Hyperscale data centers are emerging as significant contributors to market expansion, with major cloud service providers establishing facilities across the UK. These facilities require sophisticated networking architectures capable of handling massive data volumes and supporting diverse workloads. The integration of artificial intelligence and machine learning capabilities into networking equipment is creating new opportunities for intelligent network management and automated optimization.

The United Kingdom data center networking market refers to the comprehensive ecosystem of networking hardware, software, and services that enable connectivity, data transmission, and communication within data center facilities across the UK. This market encompasses all networking components required to create efficient, scalable, and secure data center environments that support modern enterprise computing requirements.

Data center networking includes physical infrastructure such as Ethernet switches, routers, load balancers, and network security appliances, as well as virtual networking solutions including software-defined networking platforms and network function virtualization technologies. The market also covers network management software, monitoring tools, and professional services that ensure optimal network performance and reliability.

Modern data center networking architectures are designed to support cloud computing, virtualization, containerization, and emerging technologies like edge computing and Internet of Things applications. These networks must provide high bandwidth, low latency, and exceptional reliability while maintaining security and enabling efficient resource utilization across distributed computing environments.

Market dynamics in the UK data center networking sector are characterized by rapid technological evolution and increasing demand for high-performance networking solutions. Organizations are investing heavily in network infrastructure modernization to support digital transformation initiatives, with software-defined networking adoption reaching 38% penetration among enterprise data centers. The shift toward hybrid cloud architectures is driving demand for flexible, programmable networking solutions that can adapt to changing business requirements.

Key market drivers include the proliferation of data-intensive applications, increasing adoption of cloud services, and growing emphasis on network security and compliance. The rise of 5G networks is creating new opportunities for data center networking providers, as telecommunications companies upgrade their infrastructure to support next-generation services. Edge computing deployment is accelerating, with organizations seeking to process data closer to end users to reduce latency and improve application performance.

Competitive landscape features established networking equipment manufacturers alongside emerging software-defined networking specialists. Innovation focuses on developing intelligent networking solutions that incorporate artificial intelligence for automated network management, predictive maintenance, and performance optimization. The market is experiencing consolidation as companies seek to offer comprehensive networking portfolios that address diverse customer requirements.

Technology adoption patterns reveal significant shifts in data center networking preferences, with organizations prioritizing flexibility, scalability, and automation capabilities.

Digital transformation initiatives across UK enterprises are creating substantial demand for advanced data center networking solutions. Organizations are modernizing their IT infrastructure to support cloud-first strategies, requiring networks that can seamlessly integrate on-premises and cloud environments. The acceleration of remote work has intensified the need for robust, scalable networking infrastructure capable of supporting distributed workforces and applications.

Cloud adoption continues to drive networking infrastructure investments, with organizations requiring sophisticated connectivity solutions to support hybrid and multi-cloud deployments. The growth of Software-as-a-Service applications is creating demand for high-performance networks that can deliver consistent user experiences across diverse applications and services. Data sovereignty requirements are influencing network design decisions as organizations seek to maintain control over data location and access.

Regulatory compliance requirements are driving investments in network security and monitoring capabilities. The implementation of GDPR and other data protection regulations has created demand for networking solutions that provide comprehensive visibility and control over data flows. Financial services organizations are particularly focused on network resilience and security to meet stringent regulatory requirements and protect sensitive customer data.

Emerging technologies including artificial intelligence, machine learning, and Internet of Things are creating new networking requirements. These technologies generate massive amounts of data that require high-bandwidth, low-latency networks for efficient processing and analysis. The deployment of 5G networks is creating opportunities for data center networking providers to support telecommunications infrastructure modernization.

High implementation costs represent a significant barrier to data center networking modernization, particularly for small and medium-sized enterprises. The complexity of modern networking solutions requires substantial investments in both hardware and professional services, creating budget constraints for organizations with limited IT resources. Legacy system integration challenges add further cost and complexity to network upgrade projects.

Skills shortage in networking expertise is constraining market growth, as organizations struggle to find qualified professionals capable of designing, implementing, and managing advanced networking solutions. The rapid evolution of networking technologies requires continuous training and certification, creating ongoing challenges for IT departments. Vendor lock-in concerns are causing organizations to hesitate in adopting proprietary networking solutions that may limit future flexibility.

Security concerns related to software-defined networking and network virtualization are creating resistance among security-conscious organizations. The increased complexity of modern networking architectures can create new attack vectors and security vulnerabilities that require specialized expertise to address. Compliance challenges associated with virtualized networking environments are causing delays in adoption among regulated industries.

Economic uncertainty is influencing IT investment decisions, with organizations deferring major infrastructure upgrades in favor of maintaining existing systems. The impact of Brexit continues to create uncertainty in technology procurement and vendor relationships, affecting long-term planning for networking infrastructure investments. Supply chain disruptions have impacted equipment availability and pricing, creating additional challenges for network deployment projects.

Edge computing deployment presents significant opportunities for data center networking providers, as organizations seek to process data closer to end users and devices. The proliferation of IoT devices is creating demand for distributed networking solutions that can support massive numbers of connected endpoints while maintaining security and performance. 5G network rollout is driving investments in edge data centers that require specialized networking infrastructure.

Artificial intelligence integration into networking solutions offers opportunities for providers to differentiate their offerings through intelligent automation and predictive analytics capabilities. Machine learning algorithms can optimize network performance, predict failures, and automate routine management tasks, creating value for customers while reducing operational complexity. Network analytics platforms are becoming essential for understanding application performance and user experience.

Sustainability initiatives are driving demand for energy-efficient networking solutions that can reduce data center power consumption and carbon footprint. Organizations are seeking green networking technologies that provide high performance while minimizing environmental impact. The development of liquid cooling solutions for high-density networking equipment is creating new market opportunities.

Industry-specific solutions offer opportunities for networking providers to develop specialized offerings for sectors such as healthcare, financial services, and manufacturing. The unique requirements of these industries create demand for customized networking solutions that address specific compliance, security, and performance requirements. Vertical market expertise is becoming a key differentiator for networking solution providers.

Technological convergence is reshaping the data center networking landscape, with traditional boundaries between networking, storage, and compute infrastructure becoming increasingly blurred. Hyper-converged infrastructure solutions are gaining popularity as organizations seek simplified management and reduced complexity in their data center environments. The integration of networking and security functions is creating demand for comprehensive platforms that address multiple infrastructure requirements.

Vendor ecosystem evolution is characterized by strategic partnerships and acquisitions as companies seek to expand their networking portfolios and capabilities. Traditional hardware vendors are investing in software-defined networking capabilities, while software companies are developing hardware partnerships to deliver complete solutions. Open networking initiatives are creating opportunities for disaggregated solutions that separate hardware and software components.

Customer buying patterns are shifting toward consumption-based models and as-a-service offerings that provide greater flexibility and predictable costs. Organizations are increasingly interested in networking-as-a-service models that reduce capital expenditure requirements and provide access to the latest technologies. The adoption of subscription-based licensing models is changing how networking solutions are procured and managed.

Innovation cycles are accelerating as networking providers compete to deliver next-generation capabilities that address emerging customer requirements. The development of intent-based networking solutions is enabling more intuitive network management through natural language interfaces and automated policy enforcement. Network programmability is becoming essential for supporting dynamic application requirements and automated infrastructure management.

Comprehensive market analysis was conducted using multiple research methodologies to ensure accuracy and reliability of findings. Primary research involved extensive interviews with industry executives, technology vendors, system integrators, and end-user organizations across various sectors in the UK. These interviews provided insights into market trends, technology adoption patterns, and future requirements that shape networking investment decisions.

Secondary research encompassed analysis of industry reports, financial statements, technology specifications, and regulatory documents to understand market dynamics and competitive positioning. Market sizing was performed through bottom-up analysis of technology segments, application areas, and regional deployment patterns. Trend analysis examined historical data to identify growth patterns and project future market development.

Data validation processes included cross-referencing information from multiple sources and conducting follow-up interviews to verify key findings. Expert panels comprising industry specialists reviewed research findings to ensure accuracy and completeness of market analysis. Statistical modeling was employed to project market growth rates and identify key performance indicators that influence market development.

Continuous monitoring of market developments ensures research findings remain current and relevant. Technology assessment evaluates emerging networking solutions and their potential impact on market dynamics. Competitive intelligence tracks vendor strategies, product developments, and market positioning to provide comprehensive understanding of the competitive landscape.

The London metropolitan area dominates the UK data center networking market, accounting for approximately 45% of the market due to the concentration of financial services organizations, technology companies, and hyperscale data centers. The region benefits from excellent connectivity infrastructure, skilled workforce availability, and proximity to major business centers. Canary Wharf and surrounding areas host numerous data centers that require sophisticated networking solutions to support high-frequency trading and financial services applications.

Manchester and surrounding regions represent the second-largest market segment, with growing adoption of data center networking solutions driven by the expansion of digital businesses and cloud service providers. The region offers cost advantages compared to London while maintaining good connectivity and infrastructure. Government initiatives to promote digital transformation in northern England are creating additional opportunities for networking solution providers.

Scotland is experiencing significant growth in data center networking investments, particularly in renewable energy-powered facilities that attract environmentally conscious organizations. The availability of green energy and favorable climate conditions for cooling are driving data center development in the region. Edinburgh and Glasgow are emerging as important secondary markets for data center networking solutions.

Regional distribution patterns show increasing decentralization as organizations deploy edge computing infrastructure closer to end users. Tier 2 cities are experiencing growth in data center networking investments as businesses seek to reduce latency and improve application performance. The development of 5G networks is creating demand for distributed networking infrastructure across multiple regions to support next-generation services and applications.

Market leadership is characterized by intense competition among established networking equipment manufacturers and emerging software-defined networking specialists. The competitive landscape features both global technology giants and specialized UK-based solution providers that offer localized expertise and support.

Competitive strategies focus on developing integrated solutions that combine networking hardware, software, and services to address complete customer requirements. Innovation investments are concentrated on artificial intelligence, machine learning, and automation capabilities that differentiate offerings in an increasingly commoditized market.

By Component:

By Technology:

By End-User Industry:

Ethernet Switching remains the largest category within the UK data center networking market, driven by the fundamental requirement for high-speed connectivity within data center environments. 100 Gigabit Ethernet adoption is accelerating as organizations upgrade infrastructure to support bandwidth-intensive applications and workloads. The transition to 400 Gigabit Ethernet is beginning among hyperscale data centers and high-performance computing environments.

Software-Defined Networking represents the fastest-growing category, with organizations seeking programmable infrastructure that can adapt to changing application requirements. SDN controllers are becoming essential for managing complex, multi-vendor networking environments. The integration of artificial intelligence into SDN platforms is enabling predictive analytics and automated optimization capabilities.

Network Security solutions are experiencing strong growth as organizations prioritize protection against cyber threats and compliance with data protection regulations. Zero-trust networking architectures are gaining adoption as traditional perimeter-based security models prove inadequate for modern data center environments. The integration of security analytics into networking platforms is providing enhanced threat detection and response capabilities.

Professional Services demand is increasing as organizations require specialized expertise to design, implement, and manage complex networking solutions. Managed services are becoming popular as organizations seek to reduce operational complexity while accessing the latest networking technologies. The shortage of networking skills is driving demand for outsourced network management services.

Technology Vendors benefit from expanding market opportunities driven by digital transformation initiatives and cloud adoption across UK enterprises. The growing complexity of networking requirements creates demand for innovative solutions that can differentiate vendors in competitive markets. Recurring revenue opportunities through software licensing and managed services are improving business model predictability and profitability.

System Integrators are experiencing increased demand for networking expertise as organizations require assistance with complex infrastructure deployments and migrations. The shortage of internal networking skills creates opportunities for specialized service providers to deliver value-added services. Partnership opportunities with technology vendors enable integrators to expand their solution portfolios and market reach.

End-User Organizations benefit from improved network performance, enhanced security, and reduced operational complexity through modern networking solutions. Automation capabilities reduce manual management tasks and improve network reliability. The flexibility of software-defined networking enables organizations to adapt quickly to changing business requirements and application demands.

Cloud Service Providers can leverage advanced networking solutions to differentiate their offerings and improve service quality for customers. Network programmability enables rapid service provisioning and customization to meet specific customer requirements. The integration of networking and cloud platforms creates opportunities for comprehensive infrastructure-as-a-service offerings.

Strengths:

Weaknesses:

Opportunities:

Threats:

Artificial Intelligence Integration is transforming data center networking through intelligent automation, predictive analytics, and self-healing network capabilities. AI-driven networking platforms can automatically optimize performance, detect anomalies, and implement corrective actions without human intervention. Machine learning algorithms are being embedded into networking equipment to enable continuous learning and improvement of network operations.

Edge Computing Proliferation is driving demand for distributed networking architectures that can support processing closer to data sources and end users. Multi-access edge computing deployments require specialized networking solutions that can handle diverse workloads and connectivity requirements. The integration of 5G networks with edge computing is creating new opportunities for ultra-low latency applications and services.

Network Security Convergence is leading to the integration of security functions directly into networking infrastructure, reducing the need for separate security appliances. Secure access service edge architectures are gaining adoption as organizations seek to simplify security management while improving protection. Zero-trust networking principles are being embedded into data center architectures to enhance security posture.

Sustainability Focus is driving development of energy-efficient networking solutions that reduce power consumption and environmental impact. Green networking initiatives include the use of renewable energy, improved cooling systems, and energy-efficient hardware designs. Organizations are increasingly considering carbon footprint as a factor in networking solution selection and data center location decisions.

Strategic Acquisitions are reshaping the competitive landscape as networking vendors seek to expand their capabilities and market reach. Recent acquisitions have focused on software-defined networking, network security, and artificial intelligence technologies. Vertical integration strategies are enabling vendors to offer comprehensive solutions that address complete customer requirements from hardware to software and services.

Technology Partnerships are becoming essential for delivering integrated solutions that combine networking, compute, and storage capabilities. Ecosystem collaboration between traditional networking vendors and cloud service providers is creating new go-to-market opportunities. Open networking initiatives are promoting interoperability and reducing vendor lock-in concerns among customers.

Product Innovation is accelerating with the introduction of next-generation networking platforms that incorporate artificial intelligence, machine learning, and advanced automation capabilities. Intent-based networking solutions are enabling more intuitive network management through natural language interfaces and automated policy enforcement. The development of quantum-safe networking solutions is addressing future security requirements.

Market Expansion initiatives include the establishment of new data centers and networking infrastructure across the UK to support growing demand for cloud services and edge computing. Hyperscale investments by major cloud service providers are driving demand for high-performance networking solutions. Government digitalization programs are creating opportunities for networking solution providers in the public sector.

Investment Prioritization should focus on software-defined networking and automation capabilities that can provide long-term competitive advantages and operational efficiency improvements. Organizations should evaluate the total cost of ownership rather than the initial purchase price when selecting networking solutions. MarkWide Research analysis indicates that organizations investing in programmable networking infrastructure achieve 25% better operational efficiency than those relying on traditional networking approaches.

Vendor Selection criteria should emphasize ecosystem compatibility, roadmap alignment, and long-term viability rather than focusing solely on current product capabilities. Organizations should prioritize vendors that demonstrate commitment to open standards and interoperability to avoid vendor lock-in situations. Professional services capabilities should be evaluated as part of vendor selection to ensure successful implementation and ongoing support.

Skills Development initiatives are essential for organizations to maximize the value of networking investments and maintain competitive advantage. Training programs should focus on software-defined networking, network automation, and security integration capabilities. Partnership strategies with managed service providers can help organizations access specialized expertise while building internal capabilities.

Future Planning should consider emerging technologies such as quantum networking, 6G communications, and advanced artificial intelligence that may impact networking requirements in the coming years. Organizations should develop technology roadmaps that align networking investments with business objectives and digital transformation initiatives. Sustainability considerations should be integrated into networking strategy to address environmental concerns and regulatory requirements.

Market evolution will be characterized by continued growth in software-defined networking adoption, with SDN penetration expected to reach 65% of enterprise data centers within the next five years. The integration of artificial intelligence and machine learning capabilities will become standard features in networking platforms, enabling autonomous network operations and predictive maintenance. Edge computing deployment will accelerate, creating demand for distributed networking solutions that can support diverse application requirements.
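As a rough illustration of what that projection implies, the sketch below computes the constant yearly growth rate needed for SDN penetration to move from 38% to 65% over five years. It assumes that the 38% figure cited earlier in this report is the starting point, that both figures measure the same population of enterprise data centers, and that growth compounds evenly; the function name `implied_annual_growth` is hypothetical and not part of any published methodology.

```python
def implied_annual_growth(start_share: float, end_share: float, years: int) -> float:
    """Constant yearly growth rate that takes start_share to end_share in `years` years."""
    if start_share <= 0 or years <= 0:
        raise ValueError("start_share and years must be positive")
    return (end_share / start_share) ** (1 / years) - 1


# Assumption: 38% today and 65% in exactly five years, compounding evenly.
rate = implied_annual_growth(0.38, 0.65, 5)
print(f"Implied yearly growth in SDN penetration: {rate:.1%}")
```

Under these assumptions the penetration rate would need to grow by roughly 11% per year in relative terms. Real adoption rarely follows an even curve, so the figure is a sanity check rather than a forecast.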

Technology convergence will continue to blur the boundaries between networking, security, and compute infrastructure, leading to the development of comprehensive platforms that address multiple infrastructure requirements. Network function virtualization will mature, enabling organizations to replace dedicated hardware appliances with software-based solutions that provide greater flexibility and cost efficiency. The adoption of intent-based networking will simplify network management and enable more agile responses to changing business requirements.

Industry transformation will be driven by the proliferation of Internet of Things devices, the rollout of 5G networks, and the continued growth of cloud computing. Quantum technologies will begin to emerge, requiring organizations to prepare for post-quantum cryptography and the security requirements that come with it. MarkWide Research (MWR) projections indicate that the market will experience sustained growth driven by digital transformation initiatives and emerging technology adoption across all industry sectors.

Competitive dynamics will intensify as traditional networking vendors compete with cloud service providers and software companies entering the networking market. Consolidation is expected to continue as companies seek to build comprehensive portfolios that can address diverse customer requirements. The importance of ecosystem partnerships will increase as no single vendor can provide all the capabilities required for modern data center networking environments.

The United Kingdom data center networking market stands at a pivotal juncture, driven by unprecedented digital transformation initiatives and the accelerating adoption of cloud computing technologies. Market dynamics indicate robust growth potential fueled by emerging technologies such as edge computing, artificial intelligence, and 5G networks that require sophisticated networking infrastructure to deliver optimal performance and reliability.

Technology evolution toward software-defined networking and intelligent automation is reshaping how organizations design, deploy, and manage their data center networks. The integration of security capabilities directly into networking platforms is addressing growing cybersecurity concerns while simplifying infrastructure management. Sustainability initiatives are becoming increasingly important as organizations seek to reduce their environmental impact while maintaining high-performance networking capabilities.

Strategic opportunities abound for technology vendors, system integrators, and end-user organizations that can effectively navigate the complex landscape of networking technologies and market requirements. Success will depend on the ability to deliver integrated solutions that address complete customer requirements while providing the flexibility and scalability needed to support future growth and innovation. The United Kingdom data center networking market is positioned for continued expansion as digital transformation accelerates across all sectors of the economy.

What is Data Center Networking?

Data Center Networking refers to the technologies and infrastructure that connect servers, storage systems, and networking devices within a data center. It encompasses various components such as switches, routers, and cabling that facilitate data transfer and communication.

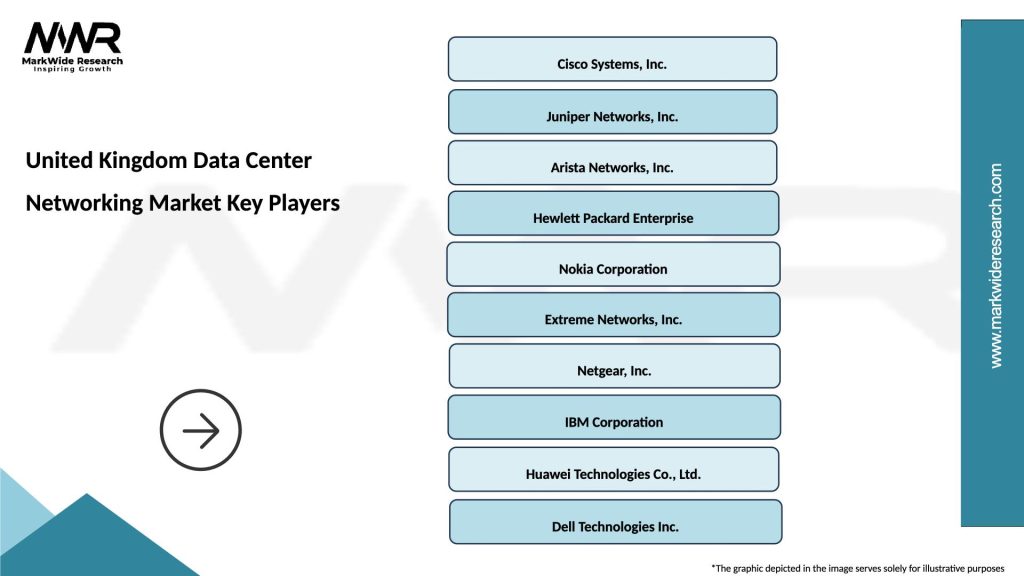

Who are the key players in the United Kingdom Data Center Networking Market?

Key players in the United Kingdom Data Center Networking Market include Cisco Systems, Arista Networks, and Juniper Networks, among others. These companies provide a range of networking solutions tailored for data centers, enhancing performance and reliability.

What are the main drivers of growth in the United Kingdom Data Center Networking Market?

The main drivers of growth in the United Kingdom Data Center Networking Market include the increasing demand for cloud services, the rise of big data analytics, and the need for enhanced network security. These factors are pushing organizations to invest in advanced networking solutions.

What challenges does the United Kingdom Data Center Networking Market face?

Challenges in the United Kingdom Data Center Networking Market include the high costs associated with upgrading existing infrastructure and the complexity of integrating new technologies. Additionally, the rapid pace of technological change can make it difficult for companies to keep up.

What opportunities exist in the United Kingdom Data Center Networking Market?

Opportunities in the United Kingdom Data Center Networking Market include the growing adoption of software-defined networking (SDN) and network function virtualization (NFV). These technologies offer flexibility and scalability, allowing businesses to optimize their networking resources.

What trends are shaping the United Kingdom Data Center Networking Market?

Trends shaping the United Kingdom Data Center Networking Market include the shift towards automation and artificial intelligence in network management, as well as the increasing focus on energy efficiency and sustainability. These trends are influencing how data centers are designed and operated.

United Kingdom Data Center Networking Market

| Segmentation Details | Description |
|---|---|
| Product Type | Switches, Routers, Firewalls, Load Balancers |
| Technology | Ethernet, Fiber Channel, InfiniBand, MPLS |
| End User | Telecommunications, Cloud Service Providers, Enterprises, Government |
| Deployment | On-Premises, Hybrid, Multi-Cloud, Colocation |

Please note: The segmentation can be entirely customized to align with our client’s needs.

Leading companies in the United Kingdom Data Center Networking Market

Please note: This is a preliminary list; the final study will feature 18–20 leading companies in this market. The selection of companies in the final report can be customized based on our client’s specific requirements.

Trusted by Global Leaders

Fortune 500 companies, SMEs, and top institutions rely on MWR’s insights to make informed decisions and drive growth.

ISO & IAF Certified

Our certifications reflect a commitment to accuracy, reliability, and high-quality market intelligence trusted worldwide.

Customized Insights

Every report is tailored to your business, offering actionable recommendations to boost growth and competitiveness.

Multi-Language Support

Final reports are delivered in English and major global languages including French, German, Spanish, Italian, Portuguese, Chinese, Japanese, Korean, Arabic, Russian, and more.

Unlimited User Access

Corporate License offers unrestricted access for your entire organization at no extra cost.

Free Company Inclusion

We add 3–4 extra companies of your choice for more relevant competitive analysis — free of charge.

Post-Sale Assistance

Dedicated account managers provide unlimited support, handling queries and customization even after delivery.
